This section provides general guidance for scaling Cassandra on Apigee Edge for Private Cloud by making the Cassandra ring rack aware.

For more information on why making your Cassandra ring rack aware is important, see the following resources:

- Replication (Cassandra documentation)

- Cassandra Architecture & Replication Factor Strategy

What is a rack?

A Cassandra rack is a logical grouping of Cassandra nodes within the ring. Cassandra uses racks so that it can ensure replicas are distributed among different logical groupings. As a result, operations are sent to not just one node, but multiple nodes, each on a separate rack, providing greater fault tolerance and availability.

The examples in this section use three Cassandra racks, which is the number of racks that are supported by Apigee in production topologies.

The default installation of Cassandra in Apigee Edge for Private Cloud assumes a single logical rack and places all the nodes in a data center within it. Although this configuration is simple to install and manage, it is susceptible to failure if an operation fails on one of those nodes.

The following image shows the default configuration of the Cassandra ring:

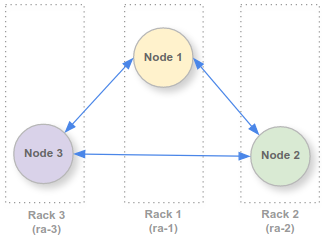

In a more robust configuration, each node would be assigned to a separate rack and operations would also execute on replicas on each of those racks.

The following image shows a three-node ring. This image shows the order in which operations are replicated across the ring (clockwise) and highlights the fact that no two nodes are on the same rack:

In this configuration, operations are sent to a node but are also sent to replicas of that node on other racks (in clockwise order).
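The clockwise, one-replica-per-rack behavior described above can be sketched in a few lines of Python. This is a simplified illustration only, not Cassandra's actual placement code: the function name `pick_replicas` and the replication factor of 3 are assumptions made for the example, and the sketch does not model what happens when there are fewer racks than replicas.

```python
def pick_replicas(ring, start, replication_factor):
    """Walk the ring clockwise from `start`, choosing one node per rack.

    `ring` is a list of (node, rack) tuples in clockwise order.
    """
    chosen, seen_racks = [], set()
    for step in range(len(ring)):
        node, rack = ring[(start + step) % len(ring)]
        # Skip nodes whose rack already holds a replica.
        if rack not in seen_racks:
            chosen.append(node)
            seen_racks.add(rack)
        if len(chosen) == replication_factor:
            break
    return chosen


# Three-node ring with one node per rack (rack names follow the "ra-N" style).
ring = [("IP1", "ra-1"), ("IP2", "ra-2"), ("IP3", "ra-3")]
print(pick_replicas(ring, 0, 3))  # ['IP1', 'IP2', 'IP3']
print(pick_replicas(ring, 1, 3))  # ['IP2', 'IP3', 'IP1']
```

An operation that arrives at IP2 is therefore also applied on IP3 and IP1, each in a different rack.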

Add rack awareness (with 3 nodes)

All production installation topologies of Apigee Edge for Private Cloud have at least three Cassandra nodes, which this section refers to as "IP1", "IP2", and "IP3". By default, each of these nodes is in the same rack, "ra-1".

This section describes how to assign the Cassandra nodes to separate racks so that all operations are sent to replica nodes in separate logical groupings within the ring.

To assign Cassandra nodes to different racks during installation:

- Before you run the installer, log in to the Cassandra node and open the following silent configuration file for editing:

/opt/silent.conf

Create the file if it does not exist and be sure to make the "apigee" user an owner.

- Edit the CASS_HOSTS property, a space-separated list of IP addresses (not DNS or hostname entries) that uses the following syntax:

CASS_HOSTS="IP_address:data_center_number,rack_number [...]"

The default value is a three-node Cassandra ring with each node assigned to rack 1 and data center 1, as the following example shows:

CASS_HOSTS="IP1:1,1 IP2:1,1 IP3:1,1"

- Change the rack assignments so that node 2 is assigned to rack 2 and node 3 is assigned to rack 3, as the following example shows:

CASS_HOSTS="IP1:1,1 IP2:1,2 IP3:1,3"

By changing the rack assignments, you instruct Cassandra to create two additional logical groupings (racks), which then provide replicas that receive all operations received by the first node.

For more information on using the CASS_HOSTS configuration property, see Edge Configuration File Reference.

- Save your changes to the configuration file and execute the following command to install Cassandra with your updated configuration:

/opt/apigee/apigee-setup/bin/setup.sh -p c -f path/to/silent/config

For example:

/opt/apigee/apigee-setup/bin/setup.sh -p c -f /opt/silent.conf

- Repeat this procedure for each Cassandra node in the ring, in the order in which the nodes are assigned in the CASS_HOSTS property. In this case, you must install Cassandra in the following order:

- Node 1 (IP1)

- Node 2 (IP2)

- Node 3 (IP3)

After installation, you should check the Cassandra configuration.
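If you maintain node assignments in a script or inventory, the CASS_HOSTS syntax from the procedure above is simple to generate. The following Python sketch is illustrative only: `build_cass_hosts` is a hypothetical helper, not part of the Apigee tooling, and it assumes each node is described as an (IP address, data center number, rack number) tuple.

```python
def build_cass_hosts(nodes):
    """Format (ip, data_center_number, rack_number) tuples as a CASS_HOSTS line."""
    entries = [f"{ip}:{dc},{rack}" for ip, dc, rack in nodes]
    # Entries are space separated and the whole value is double quoted.
    return 'CASS_HOSTS="' + " ".join(entries) + '"'


# Three nodes in data center 1, each on its own rack.
nodes = [("IP1", 1, 1), ("IP2", 1, 2), ("IP3", 1, 3)]
print(build_cass_hosts(nodes))
# CASS_HOSTS="IP1:1,1 IP2:1,2 IP3:1,3"
```

The order of the tuples matters, because it is also the order in which you install Cassandra on the nodes.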

Check the Cassandra configuration

After installing a rack-aware Cassandra configuration, you can check that the nodes are assigned to the different racks by using the nodetool status command, as the following example shows:

/opt/apigee/apigee-cassandra/bin/nodetool status

(You execute this command on one of the Cassandra nodes.)

The results should look similar to the following, where the Rack column shows the different rack IDs for each node:

    Datacenter: dc-1
    ========================
    Status=Up/Down
    |/ State=Normal/Leaving/Joining/Moving
    --  Address  Load    Tokens  Owns  Host ID                               Rack
    UN  IP1      737 MB  256     ?     554d4498-e683-4a53-b0a5-e37a9731bc5c  ra-1
    UN  IP2      744 MB  256     ?     cf8b7abf-5c5c-4361-9c2f-59e988d52da3  ra-2
    UN  IP3      723 MB  256     ?     48e0384d-738f-4589-aa3a-08dc5bd5a736  ra-3

If you enabled JMX authentication for Cassandra, you must also pass your username and password to nodetool. For more information, see Use nodetool to manage cluster nodes.
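To check the rack assignments without reading the table by eye, you could parse the nodetool status output. The following Python sketch is a rough illustration, assuming the column layout of the sample output in this section (address in the second column, rack in the last). The helper name `racks_by_node` is hypothetical, and capturing the command output (for example, by piping it into the script) is left to you.

```python
import re

# Sample text copied from the nodetool status output shown in this section.
SAMPLE = """\
Datacenter: dc-1
========================
Status=Up/Down
|/ State=Normal/Leaving/Joining/Moving
--  Address  Load    Tokens  Owns  Host ID                               Rack
UN  IP1      737 MB  256     ?     554d4498-e683-4a53-b0a5-e37a9731bc5c  ra-1
UN  IP2      744 MB  256     ?     cf8b7abf-5c5c-4361-9c2f-59e988d52da3  ra-2
UN  IP3      723 MB  256     ?     48e0384d-738f-4589-aa3a-08dc5bd5a736  ra-3
"""


def racks_by_node(status_text):
    """Map each node address to its rack, using the second and last columns."""
    racks = {}
    for line in status_text.splitlines():
        fields = line.split()
        # Node rows start with a two-letter status/state code such as UN or DN.
        if fields and re.fullmatch(r"[UD][NLJM]", fields[0]):
            racks[fields[1]] = fields[-1]
    return racks


racks = racks_by_node(SAMPLE)
print(racks)  # {'IP1': 'ra-1', 'IP2': 'ra-2', 'IP3': 'ra-3'}
# In a three-node ring, every node should report a different rack.
print(len(set(racks.values())) == len(racks))  # True
```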

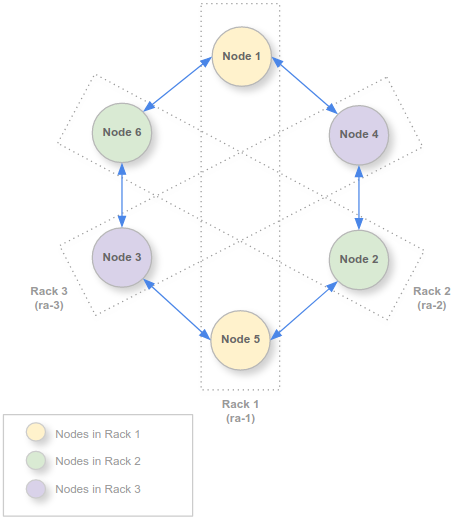

Install a six-node ring

For additional redundancy, you can expand the Cassandra ring to six nodes. In this case, you assign two nodes to each of the three racks. This configuration requires an additional three nodes: Node 4 (IP4), Node 5 (IP5), and Node 6 (IP6).

The following image shows the order in which operations are replicated across the ring (clockwise) and highlights the fact that during replication, no two adjacent nodes are on the same rack:

In this configuration, each node has two more replicas: one in each of the other two racks. For example, node 1 in rack 1 has a replica in rack 2 and in rack 3. Operations sent to node 1 are also sent to the replicas in the other racks, in clockwise order.

To expand a three-node Cassandra ring to a six-node Cassandra ring, configure the nodes in the following way in your silent configuration file:

CASS_HOSTS="IP1:1,1 IP4:1,3 IP2:1,2 IP5:1,1 IP3:1,3 IP6:1,2"

As with a three-node ring, you must install Cassandra in the same order in which the nodes appear in the CASS_HOSTS property:

- Node 1 (IP1)

- Node 4 (IP4)*

- Node 2 (IP2)

- Node 5 (IP5)

- Node 3 (IP3)

- Node 6 (IP6)

* Make your changes in the silent configuration file before running the setup utility on the fourth node (the second machine in the Cassandra installation order).
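Because the installation order must follow the CASS_HOSTS value, it can help to derive that order from the value itself rather than maintain it separately. The following Python sketch shows one way to do this. `parse_cass_hosts` is a hypothetical helper written for this example, not an Apigee utility.

```python
from collections import Counter


def parse_cass_hosts(value):
    """Return (ip, data_center, rack) tuples in the order they are listed."""
    nodes = []
    for entry in value.split():
        # Each entry has the form IP_address:data_center_number,rack_number.
        ip, placement = entry.rsplit(":", 1)
        data_center, rack = placement.split(",")
        nodes.append((ip, int(data_center), int(rack)))
    return nodes


six = "IP1:1,1 IP4:1,3 IP2:1,2 IP5:1,1 IP3:1,3 IP6:1,2"
nodes = parse_cass_hosts(six)

# Installation order is the order in which the nodes are listed.
print([ip for ip, _, _ in nodes])
# ['IP1', 'IP4', 'IP2', 'IP5', 'IP3', 'IP6']

# Each of the three racks should hold two nodes.
print(sorted(Counter(rack for _, _, rack in nodes).items()))
# [(1, 2), (2, 2), (3, 2)]
```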

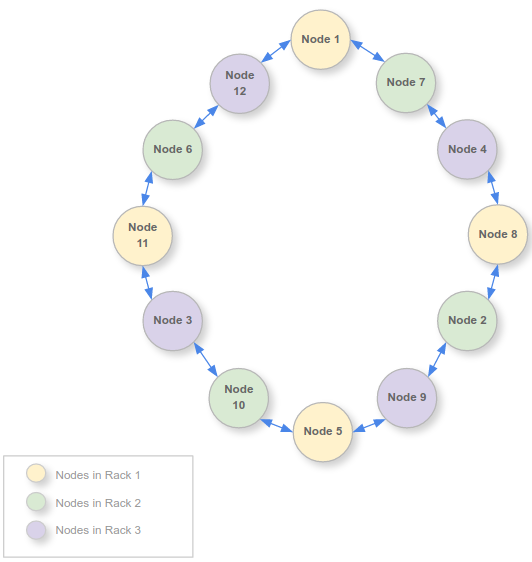

Expand to 12 nodes

To further increase fault tolerance and availability, you can increase the number of Cassandra nodes in the ring from six to 12. This configuration requires an additional six nodes (IP7 through IP12).

The following image shows the order in which operations are replicated across the ring (clockwise) and highlights the fact that during replication, no two adjacent nodes are on the same rack:

The procedure for installing a 12-node ring is similar to installing a three- or six-node ring: set CASS_HOSTS to the given values and run the installer in the specified order.

To expand to a 12-node Cassandra ring, configure the nodes in the following way in your silent configuration file:

CASS_HOSTS="IP1:1,1 IP7:1,2 IP4:1,3 IP8:1,1 IP2:1,2 IP9:1,3 IP5:1,1 IP10:1,2 IP3:1,3 IP11:1,1 IP6:1,2 IP12:1,3"

As with three- and six-node rings, you must execute the installer on nodes in the order in which the nodes appear in the configuration file:

- Node 1 (IP1)

- Node 7 (IP7)*

- Node 4 (IP4)

- Node 8 (IP8)

- Node 2 (IP2)

- Node 9 (IP9)

- Node 5 (IP5)

- Node 10 (IP10)

- Node 3 (IP3)

- Node 11 (IP11)

- Node 6 (IP6)

- Node 12 (IP12)

* You must make these changes before installing Apigee Edge for Private Cloud on the seventh node (the second machine in the Cassandra installation order).
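With 12 entries, it is easy to mistype a rack number. The following Python sketch checks a CASS_HOSTS value for the property described above: neighboring nodes are on different racks. It assumes that the order of entries in CASS_HOSTS matches the clockwise order of the ring, and it applies a slightly stricter test than adjacency (every run of three consecutive nodes, wrapping around, spans three distinct racks). Both function names are hypothetical.

```python
def racks_in_ring_order(value):
    """Extract the rack number of each CASS_HOSTS entry, in listed order."""
    return [int(entry.rsplit(",", 1)[1]) for entry in value.split()]


def windows_span_distinct_racks(racks, size=3):
    """True if every run of `size` consecutive nodes (wrapping) uses distinct racks."""
    n = len(racks)
    return all(
        len({racks[(i + k) % n] for k in range(size)}) == size
        for i in range(n)
    )


twelve = ("IP1:1,1 IP7:1,2 IP4:1,3 IP8:1,1 IP2:1,2 IP9:1,3 "
          "IP5:1,1 IP10:1,2 IP3:1,3 IP11:1,1 IP6:1,2 IP12:1,3")
print(windows_span_distinct_racks(racks_in_ring_order(twelve)))  # True
```

The same check passes for the three-node and six-node values shown earlier in this section.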