You're viewing Apigee Edge documentation. Go to the Apigee X documentation.

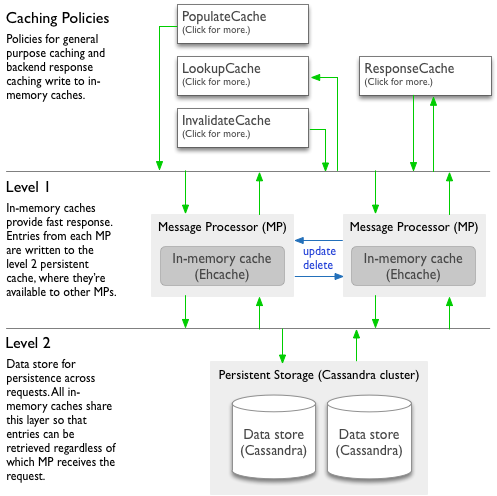

This topic describes how the cache works beneath caching policies such as the PopulateCache, LookupCache, InvalidateCache, and ResponseCache policies.

Shared and environment caches

Each caching policy you configure can use one of two cache types: an included shared cache that your applications have access to and one or more environment-scoped caches that you create.

- Shared cache: By default, your proxies have access to one shared cache in each environment. The shared cache works well for basic use cases.

  You can work with the shared cache only by using caching policies, not the management API. To have a caching policy use the shared cache, omit the policy's <CacheResource> element.

- Environment cache: When you want to configure cache properties with values you choose, you can create an environment-scoped cache. For more about creating a cache, see Creating and editing an environment cache.

  When you create an environment cache, you configure its default properties. You can have a caching policy use the environment cache by specifying the cache name in the policy's <CacheResource> element.
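The difference between the two choices comes down to whether the policy carries a <CacheResource> element. The following is a minimal sketch that builds a LookupCache policy fragment both ways. The helper `lookup_cache_policy`, the policy names, the key reference, the variable name, and the cache name `my-env-cache` are all hypothetical. The elements other than <CacheResource> are included only to make the fragment look like a policy and should be checked against the LookupCache policy reference.

```python
import xml.etree.ElementTree as ET


def lookup_cache_policy(name, key_ref, assign_to, cache_resource=None):
    """Build a LookupCache policy fragment as an XML string (hypothetical helper).

    When cache_resource is None, <CacheResource> is left out entirely,
    which is how a policy selects the shared cache.
    """
    policy = ET.Element("LookupCache", {"name": name})
    if cache_resource is not None:
        # Naming an environment cache here selects that cache.
        ET.SubElement(policy, "CacheResource").text = cache_resource
    key = ET.SubElement(policy, "CacheKey")
    ET.SubElement(key, "KeyFragment", {"ref": key_ref})
    ET.SubElement(policy, "AssignTo").text = assign_to
    return ET.tostring(policy, encoding="unicode")


# Shared cache: no <CacheResource> element.
shared = lookup_cache_policy("Lookup-Shared", "request.queryparam.id", "cached.value")

# Environment cache: <CacheResource> names a cache you created (assumed name).
scoped = lookup_cache_policy(
    "Lookup-Env", "request.queryparam.id", "cached.value",
    cache_resource="my-env-cache",
)

print(shared)
print(scoped)
```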

About cache encryption

Edge for Public Cloud: Cache is encrypted only in PCI- and HIPAA-enabled organizations. Encryption for those organizations is configured during organization provisioning.

In-memory and persistent cache levels

Both the shared and environment caches are built on a two-level system made up of an in-memory level and a persistent level. Policies interact with both levels as a combined framework. Edge manages the relationship between the levels.

- Level 1 is an in-memory cache (L1) for fast access. Each message processing node (MP) has its own in-memory cache (implemented with Ehcache) for the fastest response to requests.

  - On each node, a certain percentage of memory is reserved for use by the cache.

  - As the memory limit is reached, Apigee Edge removes cache entries from memory (though they are still kept in the L2 persistent cache) to ensure that memory remains available for other processes.

  - Entries are removed in order of time since last access, with the least recently accessed entries removed first.

  - These caches are also limited by the number of entries in the cache.

- Level 2 is a persistent cache (L2) beneath the in-memory cache. All message processing nodes share a cache data store (Cassandra) for persisting cache entries.

  - Cache entries persist here even after they're removed from L1 cache, such as when in-memory limits are reached.

  - Because the persistent cache is shared across message processors (even in different regions), cache entries are available regardless of which node receives a request for the cached data.

  - Only entries of a certain size may be cached, and other cache limits apply. See Managing cache limits.
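The eviction behavior described above can be sketched as a least-recently-used (LRU) cache with an entry limit. This is an illustrative model only, not how Edge or Ehcache is implemented: the class `L1Cache` and its `max_entries` parameter are hypothetical, a plain dictionary stands in for the shared L2 store, and the memory-percentage threshold is not modeled.

```python
from collections import OrderedDict


class L1Cache:
    """Toy in-memory cache that evicts the least recently used entry when full."""

    def __init__(self, max_entries):
        self.max_entries = max_entries
        self._entries = OrderedDict()  # oldest access first, newest last

    def get(self, key):
        if key not in self._entries:
            return None
        self._entries.move_to_end(key)  # mark as most recently used
        return self._entries[key]

    def put(self, key, value):
        self._entries[key] = value
        self._entries.move_to_end(key)
        while len(self._entries) > self.max_entries:
            self._entries.popitem(last=False)  # drop least recently used

    def __contains__(self, key):
        return key in self._entries


l2 = {}  # stand-in for the shared persistent store
l1 = L1Cache(max_entries=2)

for key, value in [("a", 1), ("b", 2)]:
    l1.put(key, value)
    l2[key] = value

l1.get("a")      # "a" is now more recently used than "b"
l1.put("c", 3)   # exceeds the entry limit, so "b" is evicted from L1
l2["c"] = 3

print("b" in l1, "b" in l2)
```

In this model the evicted entry is gone from L1 but still present in the L2 stand-in, which mirrors the point that eviction from memory does not remove an entry from the persistent cache.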

You might also be interested in Apigee Edge Caching In Detail, on the Apigee Community.

How policies use the cache

The following describes how Apigee Edge handles cache entries as your caching policies do their work.

- When a policy writes a new entry to the cache (PopulateCache or ResponseCache policy):

  - Edge writes the entry to the in-memory L1 cache on only the message processor that handled the request. If the memory limits on the message processor are reached before the entry expires, Edge removes the entry from L1 cache.

  - Edge also writes the entry to L2 cache.

- When a policy reads from the cache (LookupCache or ResponseCache policy):

  - Edge looks first for the entry in the in-memory L1 cache of the message processor handling the request.

  - If there's no corresponding in-memory entry, Edge looks for the entry in the L2 persistent cache.

  - If the entry isn't in the persistent cache:

    - LookupCache policy: No value is retrieved from the cache.

    - ResponseCache policy: Edge returns the actual response from the target to the client and stores the entry in cache until it expires or is invalidated.

- When a policy updates or invalidates an existing cache entry (InvalidateCache, PopulateCache, or ResponseCache policy):

  - The message processor receiving the request sends a broadcast to update or delete the entry in L1 cache on itself and all other message processors in all regions.

    - If the broadcast succeeds, each receiving message processor updates or removes the entry in L1 cache.

    - If the broadcast fails, the invalidated cache value remains in L1 cache on the message processors that didn't receive the broadcast. Those message processors will have stale data in L1 cache until the entry's time-to-live (TTL) expires or the entry is removed when message processor memory limits are reached.

  - The broadcast also updates or deletes the entry in L2 cache.

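The write, read, and invalidate paths above can be sketched with a small model of two message processors sharing one persistent store. Everything here is hypothetical and for illustration only: the `MessageProcessor` class, its `reachable` flag (used to simulate a failed broadcast), and the plain dictionaries standing in for L1 and L2. The source does not say whether an L2 hit is copied back into L1, so this sketch does not do that.

```python
class MessageProcessor:
    """Toy model of one message processor with its own L1 and a shared L2."""

    def __init__(self, name, l2, peers):
        self.name = name
        self.l1 = {}           # in-memory cache local to this node
        self.l2 = l2           # persistent store shared by all nodes
        self.peers = peers     # every message processor, including this one
        self.reachable = True  # False simulates a missed broadcast

    def write(self, key, value):
        # A new entry goes to L1 on the handling node only, and to L2.
        self.l1[key] = value
        self.l2[key] = value

    def read(self, key):
        # Look in the local L1 first, then fall back to the shared L2.
        if key in self.l1:
            return self.l1[key]
        return self.l2.get(key)

    def invalidate(self, key):
        # Broadcast the removal to every reachable node's L1, then clear L2.
        for mp in self.peers:
            if mp.reachable:
                mp.l1.pop(key, None)
        self.l2.pop(key, None)


l2 = {}
mps = []
mp_a = MessageProcessor("mp-a", l2, mps)
mp_b = MessageProcessor("mp-b", l2, mps)
mps.extend([mp_a, mp_b])

mp_a.write("k", "v1")
from_b = mp_b.read("k")     # L1 miss on mp-b, served from the shared L2

mp_b.l1["k"] = "v1"         # simulate mp-b also holding the entry in its L1
mp_b.reachable = False      # mp-b misses the next broadcast
mp_a.invalidate("k")

stale = mp_b.read("k")      # mp-b still serves the invalidated value from L1
fresh = mp_a.read("k")      # mp-a finds nothing in L1 or L2

print(from_b, stale, fresh)
```

In the model, the node that missed the broadcast keeps returning the old value from its own L1, which is the stale-data case described above. A TTL or memory-pressure eviction would eventually clear it, but neither is modeled here.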

Managing cache limits

Through configuration, you can manage some aspects of the cache. The overall space available for in-memory cache is limited by system resources and is not configurable. The following constraints apply to cache:

- Cache limits: Various cache limits apply, such as name and value size, total number of caches, the number of items in a cache, and expiration.

- In-memory (L1) cache. Memory limits for your cache are not configurable. Limits are set by Apigee for each message processor that hosts caches for multiple customers.

  In a hosted cloud environment, where in-memory caches for all customer deployments are hosted across multiple shared message processors, each processor features an Apigee-configurable memory percentage threshold to ensure that caching does not consume all of the application's memory. As the threshold is crossed for a given message processor, cache entries are evicted from memory on a least-recently-used basis. Entries evicted from memory remain in L2 cache until they expire or are invalidated.

- Persistent (L2) cache. Entries evicted from the in-memory cache remain in the persistent cache according to configurable time-to-live settings.

Configurable optimizations

The following table lists settings you can use to optimize cache performance. You can specify values for these settings when you create a new environment cache, as described in Creating and editing an environment cache.

| Setting | Description | Notes |
|---|---|---|
| Expiration | Specifies the time to live for cache entries. | None. |
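To make the Expiration setting concrete, here is a minimal sketch of time-to-live behavior. It is a generic illustration, not Edge's implementation: the `ExpiringCache` class, the 300-second value, and the injectable `clock` parameter are all assumptions chosen so the example runs deterministically.

```python
import time


class ExpiringCache:
    """Toy cache whose entries stop being returned once their TTL has elapsed."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl_seconds = ttl_seconds
        self._clock = clock
        self._entries = {}  # key -> (value, expiry timestamp)

    def put(self, key, value):
        self._entries[key] = (value, self._clock() + self.ttl_seconds)

    def get(self, key):
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._entries[key]  # expired entries are dropped on access
            return None
        return value


# A fake clock keeps the example deterministic.
now = [0.0]
cache = ExpiringCache(ttl_seconds=300, clock=lambda: now[0])

cache.put("k", "v")
now[0] = 299.0
before = cache.get("k")   # still within the time to live
now[0] = 300.0
after = cache.get("k")    # time to live has elapsed

print(before, after)
```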