You're viewing Apigee Edge documentation.


You can use Edge Microgateway to provide Apigee API management for services running in a Kubernetes cluster. This topic explains why you might want to deploy Edge Microgateway on Kubernetes, and it describes how to deploy Edge Microgateway to Kubernetes as a service.

Use case

Services deployed to Kubernetes commonly expose APIs, either to external consumers or to other services running within the cluster.

In either case, there is an important problem to solve: How will you manage these APIs? For example:

- How will you secure them?

- How will you manage traffic?

- How will you gain insight into traffic patterns, latencies, and errors?

- How will you publish your APIs so developers can discover and use them?

Whether you are migrating existing services and APIs to the Kubernetes stack or are creating new services and APIs, Edge Microgateway helps provide a clean API management experience that includes security, traffic management, analytics, publishing, and more.

Running Edge Microgateway as a service

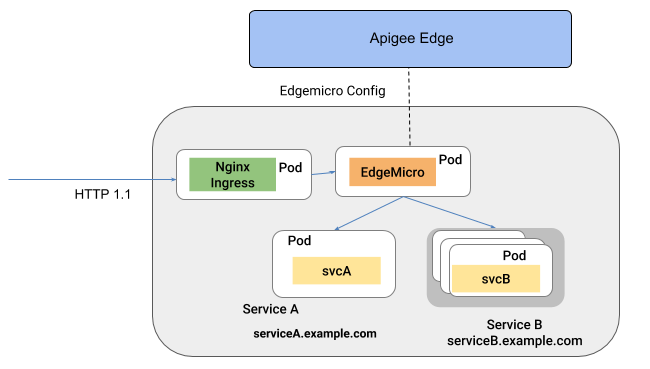

When deployed to Kubernetes as a service, Edge Microgateway runs in its own pod. In this architecture, Edge Microgateway intercepts incoming API calls and routes them to one or more target services running in other pods. In this configuration, Edge Microgateway provides API management features such as security, analytics, traffic management, and policy enforcement to the other services.

The following figure illustrates the architecture where Edge Microgateway runs as a service in a Kubernetes cluster:

See Deploy Edge Microgateway as a service in Kubernetes.
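The request flow described in this section can be illustrated with a small, self-contained Python sketch. This is not Edge Microgateway code or configuration. The `Route` and `GatewaySketch` names, the `/orders` and `/inventory` base paths, the in-cluster service URLs, and the API key are all hypothetical. They show the pattern in which a gateway pod checks a credential, selects a target service by base path, and records a per-route call count before forwarding the call.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Route:
    base_path: str   # public base path, e.g. "/orders" (hypothetical)
    target_url: str  # in-cluster Service URL (hypothetical)


@dataclass
class GatewaySketch:
    """Toy model of a gateway pod that fronts services running in other pods."""
    routes: list[Route]
    valid_keys: set[str]
    hits: Counter = field(default_factory=Counter)

    def resolve(self, path: str, api_key: Optional[str]) -> tuple[int, Optional[str]]:
        """Return (status, upstream URL); status 200 means 'forward the call'."""
        # Security: reject calls that lack a known API key.
        if api_key not in self.valid_keys:
            return 401, None
        # Routing: pick the longest base path that matches the request path.
        matches = [
            r for r in self.routes
            if path == r.base_path or path.startswith(r.base_path + "/")
        ]
        if not matches:
            return 404, None
        route = max(matches, key=lambda r: len(r.base_path))
        # Analytics: count forwarded calls per base path.
        self.hits[route.base_path] += 1
        return 200, route.target_url + path[len(route.base_path):]


if __name__ == "__main__":
    gateway = GatewaySketch(
        routes=[
            Route("/orders", "http://orders.default.svc.cluster.local:8080"),
            Route("/inventory", "http://inventory.default.svc.cluster.local:8080"),
        ],
        valid_keys={"demo-key"},
    )
    print(gateway.resolve("/orders/42", "demo-key"))
    print(gateway.resolve("/orders/42", None))
    print(gateway.resolve("/unknown", "demo-key"))
    print(dict(gateway.hits))
```

Matching on the longest base path ensures that a more specific route is not shadowed by a shorter one. The sketch only computes where a call would go. It does not send HTTP requests or enforce quotas.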

Next step

- To learn how to run Edge Microgateway as a service in Kubernetes, see Deploy Edge Microgateway as a service in Kubernetes.