Edge for Private Cloud v. 4.16.09

ZooKeeper ensembles are designed to remain functional, with no data loss, despite the loss of one or more ZooKeeper nodes, as long as a quorum of voter nodes remains available. You can use this resilience to perform maintenance on ZooKeeper nodes with no system downtime.

About ZooKeeper and Edge

In Edge, ZooKeeper nodes contain configuration data about the location and configuration of the various Edge components, and notify the different components of configuration changes. All supported Edge topologies for a production system specify at least three ZooKeeper nodes.

Use the ZK_HOSTS and ZK_CLIENT_HOSTS properties in the Edge config file to specify the ZooKeeper nodes. For example:

ZK_HOSTS="$IP1 $IP2 $IP3"
ZK_CLIENT_HOSTS="$IP1 $IP2 $IP3"

where:

- ZK_HOSTS - Specifies the IP addresses of the ZooKeeper nodes. The IP addresses must be listed in the same order on all ZooKeeper nodes. In a multi-Data Center environment, list all ZooKeeper nodes from all Data Centers.

- ZK_CLIENT_HOSTS - Specifies the IP addresses of the ZooKeeper nodes used by this Data Center only. The IP addresses must be listed in the same order on all ZooKeeper nodes in the Data Center. In a single Data Center installation, these are the same nodes as specified by ZK_HOSTS. In a multi-Data Center environment, the Edge config file for each Data Center should list only the ZooKeeper nodes for that Data Center.

By default, all ZooKeeper nodes are designated as voter nodes. That means the nodes all participate in electing the ZooKeeper leader. You can append the “:observer” modifier to an IP address in ZK_HOSTS to signify that the node is an observer node, and not a voter. An observer node does not participate in the election of the leader.

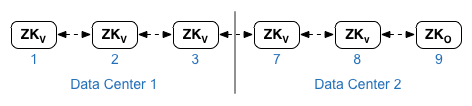

You typically specify the “:observer” modifier when creating multiple Edge Data Centers, or when a single Data Center has a large number of ZooKeeper nodes. For example, in a 12-host Edge installation with two Data Centers, ZooKeeper on node 9 in Data Center 2 is the observer.

You then use the following settings in your config file for Data Center 1:

ZK_HOSTS="$IP1 $IP2 $IP3 $IP7 $IP8 $IP9:observer"
ZK_CLIENT_HOSTS="$IP1 $IP2 $IP3"

And for Data Center 2:

ZK_HOSTS="$IP1 $IP2 $IP3 $IP7 $IP8 $IP9:observer"
ZK_CLIENT_HOSTS="$IP7 $IP8 $IP9"
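To double-check a ZK_HOSTS value before installation, you can count how many of its entries are voters and how many are observers. The following is a minimal shell sketch, not an Edge tool: the function name count_zk_roles and the 10.0.0.x addresses (standing in for $IP1 through $IP9) are hypothetical, and it assumes entries are separated by whitespace with observers marked by the “:observer” suffix as shown above.

```bash
#!/usr/bin/env bash
# Sketch: classify the entries of a ZK_HOSTS value as voters or observers.
# Assumes whitespace-separated entries; an entry ending in ":observer" is an observer.
# count_zk_roles is a hypothetical helper, not part of Edge.

count_zk_roles() {
  local hosts="$1" entry voters=0 observers=0
  for entry in $hosts; do               # unquoted on purpose: split on whitespace
    if [[ "$entry" == *:observer ]]; then
      observers=$((observers + 1))
    else
      voters=$((voters + 1))
    fi
  done
  echo "voters=$voters observers=$observers"
}

# Placeholder addresses in place of $IP1 $IP2 $IP3 $IP7 $IP8 $IP9:observer
count_zk_roles "10.0.0.1 10.0.0.2 10.0.0.3 10.0.0.7 10.0.0.8 10.0.0.9:observer"
# prints: voters=5 observers=1
```

Because ZK_HOSTS is identical in the Data Center 1 and Data Center 2 config files, both report the same five voters and one observer.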

About leaders, followers, voters, and observers

In a multi-node ZooKeeper installation, one of the nodes is designated as the leader. All other ZooKeeper nodes are designated as followers. While reads can happen from any ZooKeeper node, all write requests are forwarded to the leader. For example, when a new Message Processor is added to Edge, that information is written to the ZooKeeper leader, and all followers then replicate the data.

At Edge installation time, you designate each ZooKeeper node as a voter or as an observer. The leader is then elected by all voter ZooKeeper nodes. The one requirement for the election of a leader is that there must be a quorum of functioning ZooKeeper voter nodes available. A quorum means more than half of all voter ZooKeeper nodes, across all Data Centers, are functional.

If a quorum of voter nodes is not available, no leader can be elected. In this scenario, ZooKeeper cannot serve requests. That means you cannot make requests to the Edge Management Server, process Management API requests, or log in to the Edge UI until the quorum is restored.

For example, in a single Data Center installation:

- You installed three ZooKeeper nodes

- All ZooKeeper nodes are voters

- The quorum is two functioning voter nodes

- If only one voter node is available then the ZooKeeper ensemble cannot function

In an installation with two Data Centers:

- You installed three ZooKeeper nodes per Data Center, for a total of six nodes

- Data Center 1 has three voter nodes

- Data Center 2 has two voter nodes and one observer node

- The quorum is based on the five voters across both Data Centers, and is therefore three functioning voter nodes

- If two or fewer voter nodes are available, the ZooKeeper ensemble cannot function
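The quorum numbers in both examples follow from integer division: with N voter nodes, a quorum is N/2 + 1 (rounded down), the smallest count that is more than half. The following shell sketch uses hypothetical function names to reproduce the two examples.

```bash
#!/usr/bin/env bash
# Sketch of the quorum rule: more than half of all voter nodes, across all Data Centers.
# quorum_size and has_quorum are hypothetical helpers, not part of Edge.

# Print the quorum size for a given number of voter nodes.
quorum_size() {
  local voters="$1"
  echo $(( voters / 2 + 1 ))            # bash integer division rounds down
}

# Succeed (exit status 0) if the available voters still form a quorum.
has_quorum() {
  local voters="$1" available="$2"
  (( available >= voters / 2 + 1 ))
}

quorum_size 3    # single Data Center example: prints 2
quorum_size 5    # two Data Center example: prints 3

has_quorum 3 1 || echo "3 voters, 1 available: no quorum"
has_quorum 5 2 || echo "5 voters, 2 available: no quorum"
has_quorum 5 3 && echo "5 voters, 3 available: quorum"
```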

Adding nodes as voters or observers

Your system requirements might require you to add ZooKeeper nodes to your Edge installation. The Adding ZooKeeper nodes documentation describes how to add ZooKeeper nodes to Edge. When adding ZooKeeper nodes, you must consider which type of node to add: voter or observer.

Make sure that you have enough voter nodes that the ZooKeeper ensemble can still function when one or more voter nodes are down, meaning that a quorum of voter nodes is still available. Adding voter nodes increases the size of the quorum, but it increases the total number of voters faster, so in general you can tolerate more voter nodes being down.
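The trade-off can be tabulated: the number of voter nodes that can be down at once is the number of voters minus the quorum size. The following shell sketch (the helper name max_voter_failures is hypothetical) prints that table for one through nine voters.

```bash
#!/usr/bin/env bash
# Sketch: how many voter nodes can be down while a quorum remains.
# max_voter_failures is a hypothetical helper, not part of Edge.

max_voter_failures() {
  local voters="$1"
  local quorum=$(( voters / 2 + 1 ))    # more than half of the voters
  echo $(( voters - quorum ))
}

printf '%-8s %-8s %s\n' "voters" "quorum" "can_be_down"
for n in 1 2 3 4 5 6 7 8 9; do
  printf '%-8s %-8s %s\n' "$n" "$(( n / 2 + 1 ))" "$(max_voter_failures "$n")"
done
```

The table shows that an even number of voters tolerates no more failures than the odd number just below it: four voters, like three, can lose only one voter, while five voters can lose two.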

However, adding voter nodes can negatively affect write performance, because each write must be acknowledged by a quorum of voter nodes before it is committed, and the quorum grows as you add voter nodes. Leader election also involves every voter node, so it takes longer as the number of voters increases. Therefore, you do not want to make all the nodes voters.

Rather than adding voter nodes, you can add observer nodes. Adding observer nodes increases the overall system read performance without adding to the overhead of electing a leader because observer nodes do not vote and do not affect the quorum size. Therefore, if an observer node goes down, it does not affect the ability of the ensemble to elect a leader. However, losing observer nodes can cause degradation in the read performance of the ZooKeeper ensemble because there are fewer nodes available to service data requests.

In a single Data Center, Apigee recommends that you have no more than five voters, regardless of the number of observer nodes. In two Data Centers, Apigee recommends that you have no more than nine voters (five in one Data Center and four in the other). You can then add as many observer nodes as necessary for your system requirements.

Maintenance Considerations

ZooKeeper maintenance can be performed on a fully functioning ensemble with no downtime if it is performed on a single node at a time. By making sure that only one ZooKeeper node is down at any one time, you can ensure that there is always a quorum of voter nodes available to elect a leader.

Maintenance across Multiple Data Centers

When working with multiple Data Centers, remember that the ZooKeeper ensemble does not distinguish between Data Centers. ZooKeeper views all of the ZooKeeper nodes across all Data Centers as one ensemble.

The location of the voter nodes in a given Data Center is not a factor when ZooKeeper performs quorum calculations. Individual nodes can go down across Data Centers, but as long as a quorum is preserved across the entire ensemble then ZooKeeper remains functional.
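Because the quorum is computed over the whole ensemble, what matters is the total number of voters that are up, not which Data Center they are in. The following shell sketch applies this to the two-Data-Center example earlier on this page (three voters in Data Center 1, two in Data Center 2); the function name and its argument layout are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: decide whether the whole ensemble keeps a quorum, given how many voter
# nodes are up in each Data Center. ZooKeeper ignores Data Center boundaries,
# so the per-Data Center counts are simply summed.
# ensemble_has_quorum is a hypothetical helper, not part of Edge.
# Usage: ensemble_has_quorum TOTAL_VOTERS UP_IN_DC1 [UP_IN_DC2 ...]
ensemble_has_quorum() {
  local total_voters="$1"; shift
  local up=0 n
  for n in "$@"; do                     # remaining args: voters up in each Data Center
    up=$(( up + n ))
  done
  (( up >= total_voters / 2 + 1 ))
}

# Five voters in total: three in Data Center 1, two in Data Center 2.
ensemble_has_quorum 5 3 0 && echo "Data Center 2 voters down: quorum kept"
ensemble_has_quorum 5 0 2 || echo "Data Center 1 voters down: quorum lost"
ensemble_has_quorum 5 2 1 && echo "one voter down in each Data Center: quorum kept"
```

Losing every voter in Data Center 1 breaks the quorum even though Data Center 2 is untouched, because Data Center 2 holds only two of the five voters.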

Maintenance Implications

At various times, a ZooKeeper node, either a voter node or an observer node, will be down. For example, you might take it down to upgrade the version of Edge on the node, the machine hosting ZooKeeper might fail, or the node might become unavailable for some other reason, such as a network error.

If the node that goes down is an observer node, then you can expect a slight degradation in the performance of the ZooKeeper ensemble until the node is restored. If the node is a voter node, it can impact the viability of the ZooKeeper ensemble due to the loss of a node that participates in the leader election process. Regardless of the reason for the voter node going down, it is important to maintain a quorum of available voter nodes.

Maintenance Procedure

Perform maintenance only after confirming that the ZooKeeper ensemble is functional: the observer nodes are functional, and enough voter nodes will remain available during maintenance to retain a quorum.

When these conditions are met, then a ZooKeeper ensemble of arbitrary size can tolerate the loss of a single node at any point with no loss of data or meaningful impact to performance. This means you are free to perform maintenance on any node in the ensemble as long as it is on one node at a time.
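Before taking each node down, you can check the one-node-at-a-time condition explicitly. This shell sketch is a hypothetical helper, not an Edge command. It takes the node's role as reported on the Mode line of the ZooKeeper stat output (leader, follower, or observer), the total number of voter nodes, and the number of voter nodes currently healthy, all of which you supply yourself.

```bash
#!/usr/bin/env bash
# Sketch: is it safe to stop this node without losing the quorum?
# safe_to_stop is a hypothetical helper, not part of Edge.
# Usage: safe_to_stop MODE TOTAL_VOTERS HEALTHY_VOTERS
safe_to_stop() {
  local mode="$1" total_voters="$2" healthy_voters="$3"
  local quorum=$(( total_voters / 2 + 1 ))
  case "$mode" in
    observer)
      return 0 ;;                              # observers do not count toward the quorum
    leader|follower)
      (( healthy_voters - 1 >= quorum )) ;;    # this voter will no longer count
    *)
      return 1 ;;                              # standalone or unknown: do not assume it is safe
  esac
}

safe_to_stop follower 3 3 && echo "OK to stop: 2 of 3 voters remain"
safe_to_stop follower 3 2 || echo "Wait: stopping this voter would leave 1 of 3"
```

A standalone node is treated as unsafe because stopping the only ZooKeeper node stops ZooKeeper entirely.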

As part of performing maintenance, use the following procedure to determine the type of a ZooKeeper node (leader, voter, or observer):

- If nc is not installed on the ZooKeeper node, install it:

> sudo yum install nc

- Run the following nc command on the node:

> echo stat | nc localhost 2181

where 2181 is the ZooKeeper port. You should see output in the form:

Zookeeper version: 3.4.5-1392090, built on 09/30/2012 17:52 GMT

Clients: /a.b.c.d:xxxx[0](queued=0,recved=1,sent=0)

Latency min/avg/max: 0/0/0

Received: 1

Sent: 0

Connections: 1

Outstanding: 0

Zxid: 0xc00000044

Mode: follower

Node count: 653

In the Mode line of the output for the nodes, you should see observer, leader, or follower (meaning a voter that is not the leader) depending on the node configuration.

Note: In a standalone installation of Edge with a single ZooKeeper node, the Mode is set to standalone.

- Repeat the previous two steps on each ZooKeeper node.
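To check the whole ensemble from one machine, you can send the same stat command to each node in a loop. This is a hedged shell sketch: it assumes nc is installed where you run it, that each node accepts ZooKeeper connections on port 2181 from that machine, and that the stat command is permitted (some newer ZooKeeper releases restrict four-letter commands through the 4lw.commands.whitelist setting). The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: report the Mode of each ZooKeeper node using "echo stat | nc HOST 2181".
# zk_mode_from_stat and report_zk_modes are hypothetical helpers, not part of Edge.

# Print the value of the "Mode:" line from stat output read on stdin.
zk_mode_from_stat() {
  awk -F': ' '/^Mode:/ { print $2 }'
}

# Query each host given as an argument and print "<host> <mode>".
# -w 5 limits how long nc waits; an empty result is reported as "unreachable".
report_zk_modes() {
  local host mode
  for host in "$@"; do
    mode=$(echo stat | nc -w 5 "$host" 2181 | zk_mode_from_stat)
    echo "$host ${mode:-unreachable}"
  done
}
```

For example, report_zk_modes 10.0.0.1 10.0.0.2 10.0.0.3 (placeholder addresses) prints one line per node. In a healthy ensemble, exactly one node reports leader, and every other node reports follower or observer.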

Summary

The best way to perform maintenance on a ZooKeeper ensemble is to perform it one node at a time. Remember:

- You must maintain a quorum of voter nodes during maintenance to ensure the ZooKeeper ensemble stays functional

- Taking down an observer node does not affect the quorum or the ability to elect a leader

- The quorum is calculated across all ZooKeeper nodes in all Data Centers

- Move on to maintenance of the next server only after the previous server is operational again

- Use the nc command to inspect the ZooKeeper node