
Edge Microgateway v. 3.3.x

Apigee Edge Microgateway is a secure, HTTP-based message processor for APIs. Its main job is to process requests and responses to and from backend services securely while asynchronously pushing valuable API execution data to Apigee Edge where it is consumed by the Edge Analytics system. Edge Microgateway is easy to install and deploy -- you can have an instance up and running within minutes.

Typically, Edge Microgateway is installed within a trusted network in close proximity to backend target services. It provides enterprise-grade security, and some key plugin features such as spike arrest, quota, and analytics, but not the full capabilities or footprint of Apigee Edge. You can install Edge Microgateway in the same data center or even on the same machine as your backend services if you wish.

You can run Edge Microgateway as a standalone process or run it in a Docker container. See Using Docker with Edge Microgateway. You can also use Edge Microgateway to provide Apigee API management for services running in a Kubernetes cluster. Whether you are migrating existing services and APIs to the Kubernetes stack or are creating new services and APIs, Edge Microgateway helps provide a clean API management experience that includes security, traffic management, analytics, publishing, and more. See Integrate Edge Microgateway with Kubernetes.

Typical use cases

Typical use cases for a hybrid Cloud API management solution such as Edge Microgateway include:

- Reduce latency of API traffic for services that run in close proximity. For example, if your API consumers and producers are in close proximity, you do not necessarily want APIs to go through a central gateway.

- Keep API traffic within the enterprise-approved boundaries for security or compliance purposes.

- Continue processing messages if the internet connection is temporarily lost.

- Provide Apigee API management for services running in a Kubernetes cluster. See Integrate Edge Microgateway with Kubernetes.

For additional use cases, see this Apigee Community article.

Key features and benefits

| Feature | Benefits |

|---|---|

| Security | Edge Microgateway authenticates requests with a signed access token or API key issued to each client app by Apigee Edge. |

| Rapid deployment | Unlike a full deployment of Apigee Edge, you can deploy and run an instance of Edge Microgateway within a matter of minutes. |

| Network proximity | You can install and manage Edge Microgateway in the same machine, subnet, or data center as the backend target APIs with which Edge Microgateway interacts. |

| Analytics | Edge Microgateway asynchronously delivers API execution data to Apigee Edge, where it is processed by the Edge Analytics system. You can use the full suite of Edge Analytics metrics, dashboards, and APIs. |

| Reduced latency | All communication with Apigee Edge is asynchronous and does not happen as part of processing client API requests. This allows Edge Microgateway to collect API data and send it to Apigee Edge without affecting latency. |

| Familiarity | Edge Microgateway uses and interacts with Apigee Edge features that Edge administrators already understand well, such as proxies, products, and developer apps. |

| Configuration | No programming is required to set up and manage Edge Microgateway. Everything is handled through configuration. |

| Convenience | You can integrate Edge Microgateway with your existing application monitoring and management infrastructure and processes. Note that Apigee API Monitoring is not supported for Edge Microgateway. |

| Logging | Log files detail all the normal and exceptional events encountered during API processing by Edge Microgateway. |

| CLI | A command-line interface lets you start, stop, and restart Edge Microgateway, extract operating statistics, view log files, request access tokens, and more. |

What you need to know about Edge Microgateway

This section describes how Edge Microgateway works, its basic architecture, configuration, and deployment.

Why use Edge Microgateway?

Moving the API management component close to backend target applications can reduce network latency. While you can install Apigee Edge on-premises in a private cloud, a full deployment of Apigee Edge is necessarily large and complex, because it must support the full feature set, including data-heavy features like key management, monetization, and analytics. This means that deploying Apigee Edge on-premises in each data center is not always desirable.

With Edge Microgateway, you get a relatively small-footprint application running close to your backend applications, and you can still use the full Apigee Edge platform for analytics, security, and other features.

Sample deployment scenarios

This section illustrates several possible deployment scenarios for Edge Microgateway.

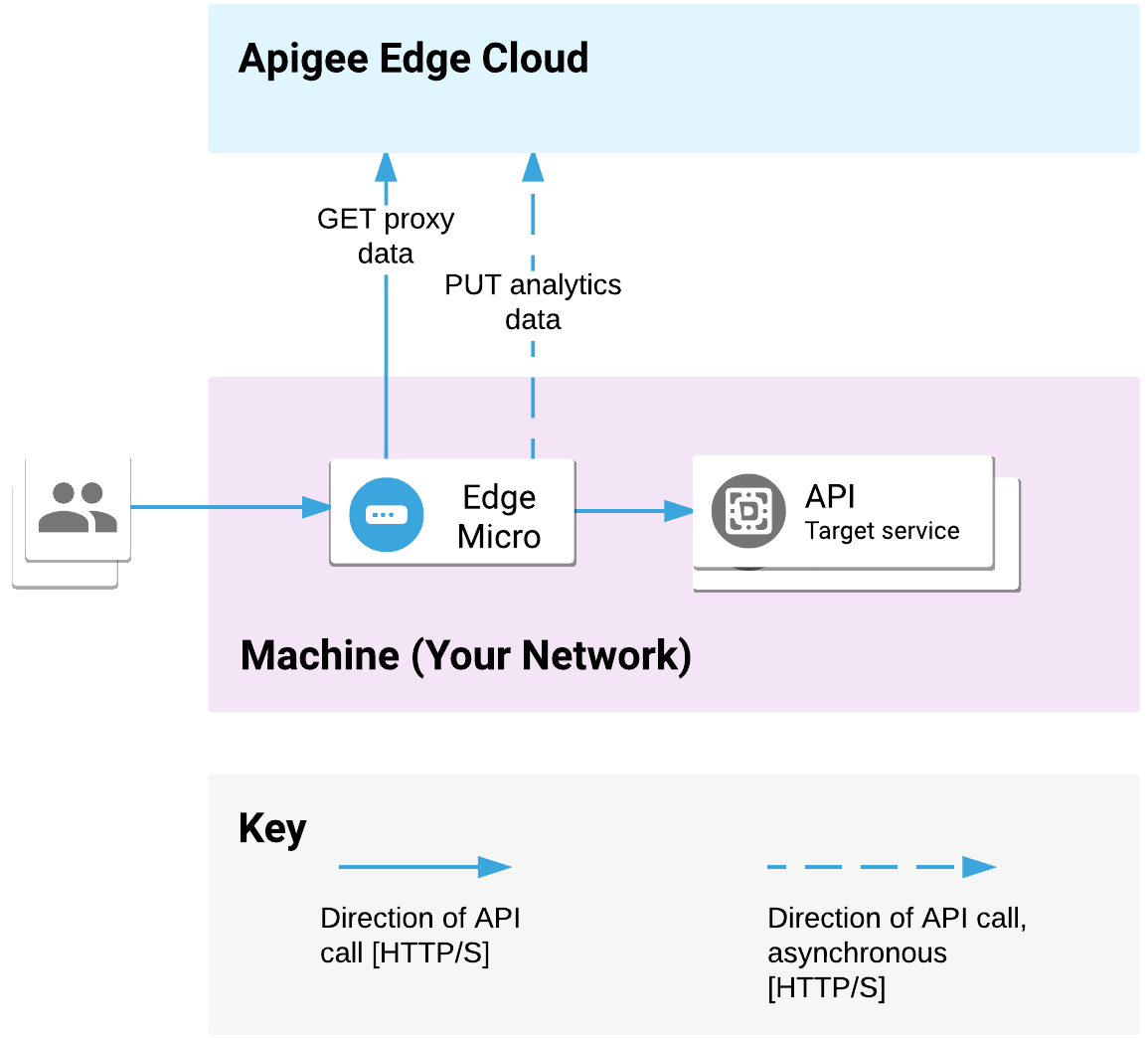

Same machine

Figure 1 shows the request processing path when Edge Microgateway is deployed in its simplest possible configuration, where Edge Microgateway and the backend target APIs are installed on the same machine. A single Edge Microgateway instance can be used to front multiple backend target applications.

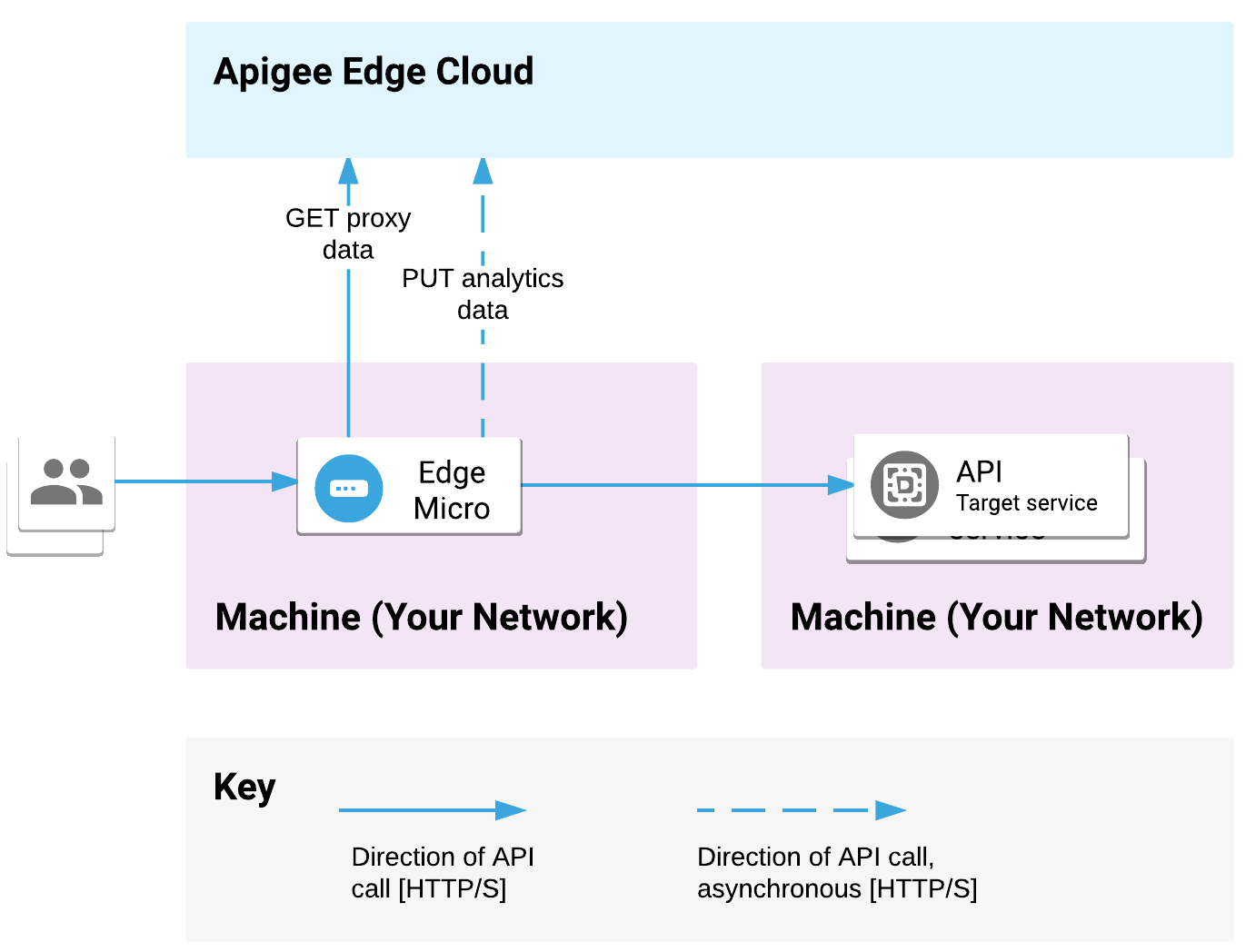

Different machine

Because all the communication between clients, Edge Microgateway, and backend API implementations is HTTP, you can install Apigee Edge Microgateway on a different machine from the API implementation, as shown in Figure 2.

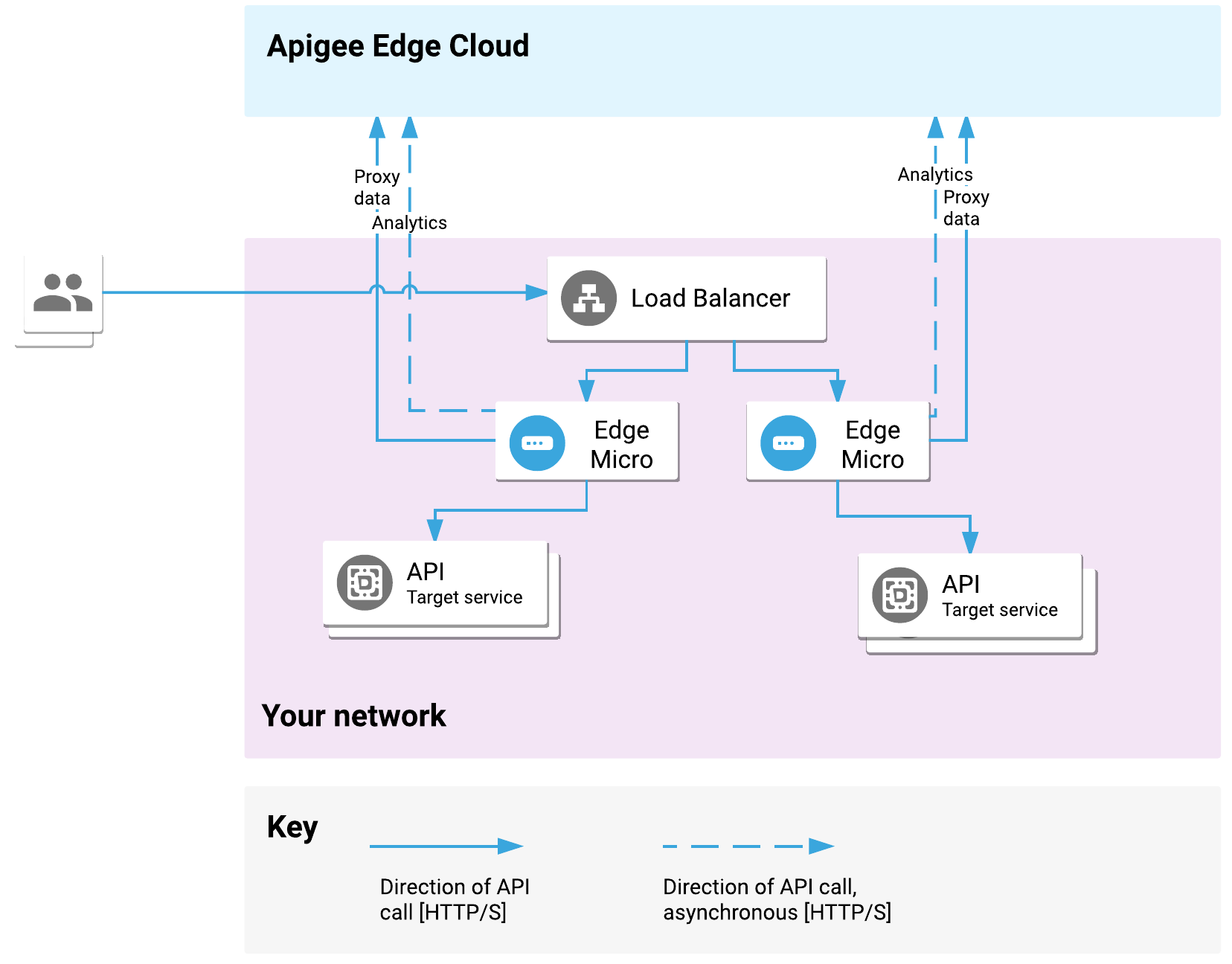

With load balancer

Edge Microgateway itself can be front-ended by a standard reverse proxy or load-balancer for SSL termination and/or load-balancing, as shown in Figure 3.

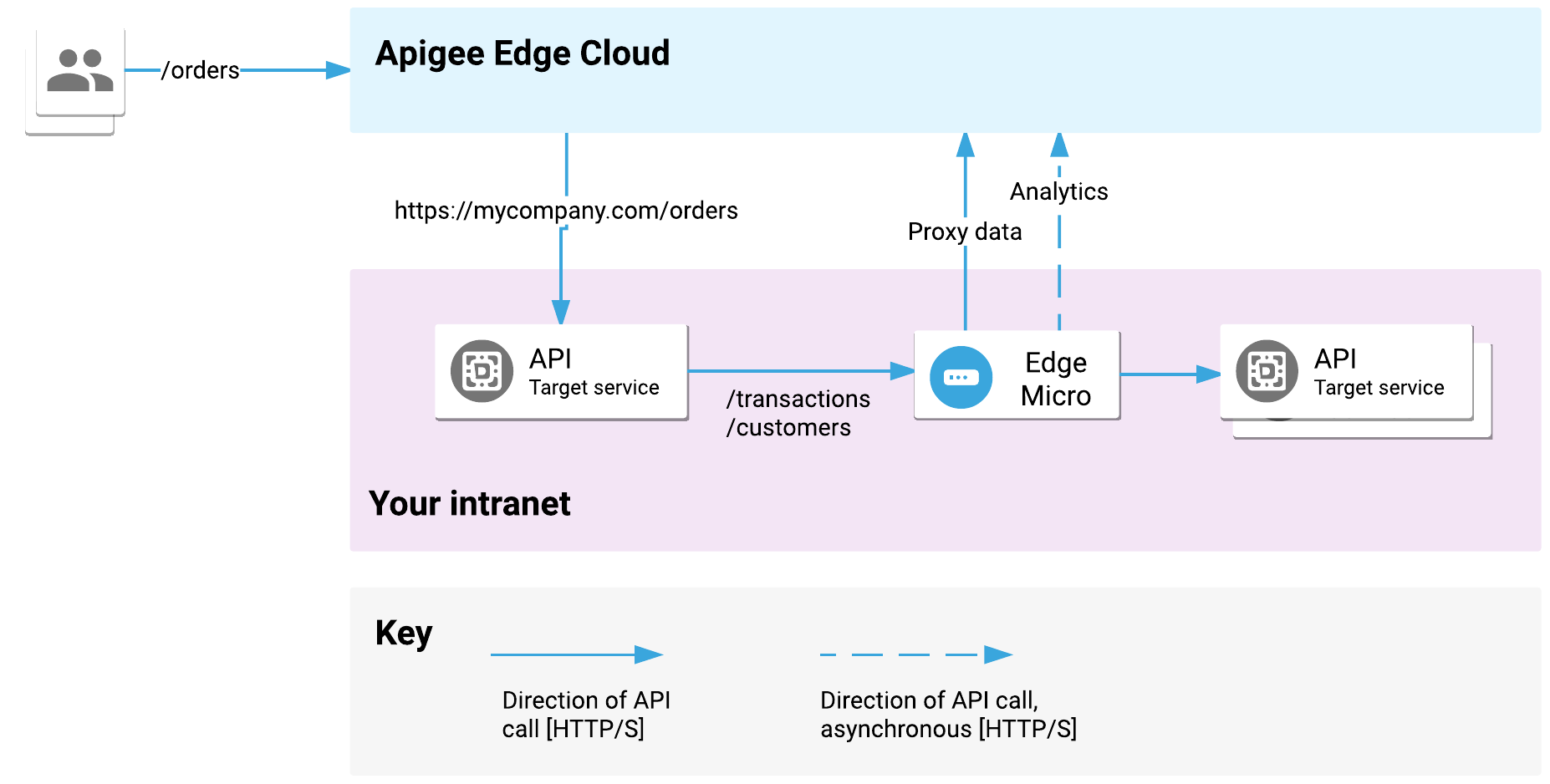

Intranet deployment

Use Edge Microgateway to protect intranet traffic while protecting internet traffic with Apigee Edge, as illustrated in Figure 4. Let's say an API endpoint /orders is proxied through Apigee Edge Cloud, and it hits the backend target https://mycompany.com/orders. This is represented by the target API implementation on the left. This API may then call multiple API endpoints represented by the target implementation on the right. For example, it may call internally /customers and /transactions. See also this post on the Apigee Community.

Dependency on Apigee Edge

Edge Microgateway depends on and interacts with Apigee Edge. Edge Microgateway must communicate with Apigee Edge to function properly. The primary ways that Edge Microgateway interacts with Edge are:

- Upon startup, Edge Microgateway obtains a list of special "Edge Microgateway-aware" proxies and a list of all of the API products from your Apigee Edge organization. For each incoming client request, Edge Microgateway determines if the request matches one of these API proxies and then validates the incoming access token or API key based on the keys in the API product associated with that proxy.

- The Apigee Edge Analytics system stores and processes API data sent asynchronously from Edge Microgateway.

- Apigee Edge provides credentials used to sign access tokens or provide API keys that are required by clients making API calls through Edge Microgateway. You can obtain these tokens using a CLI command provided with Edge Microgateway.
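The startup-and-match flow in the first bullet can be sketched roughly as follows. This is an illustration only, not Edge Microgateway's actual implementation: the `Proxy` and `Product` classes, the `match_proxy` and `key_allowed` functions, and the longest-base-path matching rule are all assumptions made for this example.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass(frozen=True)
class Proxy:
    """Hypothetical record for an Edge Microgateway-aware proxy."""
    name: str
    base_path: str
    target_url: str


@dataclass
class Product:
    """Hypothetical record for an API product and the keys issued for it."""
    name: str
    proxy_names: Set[str] = field(default_factory=set)
    api_keys: Set[str] = field(default_factory=set)


def match_proxy(path: str, proxies: List[Proxy]) -> Optional[Proxy]:
    """Return the proxy whose base path is the longest prefix of the path.

    Assumption: matching is done on whole path segments, so "/weather"
    matches "/weather/forecast" but not "/weatherx".
    """
    candidates = [
        p for p in proxies
        if path == p.base_path or path.startswith(p.base_path.rstrip("/") + "/")
    ]
    return max(candidates, key=lambda p: len(p.base_path), default=None)


def key_allowed(api_key: str, proxy: Proxy, products: List[Product]) -> bool:
    """Accept the key only if a product bundles this proxy and lists the key."""
    return any(
        proxy.name in prod.proxy_names and api_key in prod.api_keys
        for prod in products
    )
```

In this sketch, the proxy and product lists would be loaded once at startup, so each incoming request is checked against local data rather than triggering a call to Apigee Edge.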

One-time configuration

You must initially configure Edge Microgateway to be able to communicate with your Apigee Edge organization. On startup, Edge Microgateway initiates a bootstrapping operation with Apigee Edge. Edge Microgateway retrieves from Apigee Edge the information it requires to process API calls on its own, including the list of Edge Microgateway-aware proxies that are deployed on Apigee Edge. We'll talk more about these proxies shortly.

Edge Microgateway does not have to be co-located with Apigee Edge; Apigee Edge public and private cloud offerings work equally well.

What you need to know about Edge Microgateway-aware proxies

Edge Microgateway-aware proxies provide Edge Microgateway with certain information that allows it to process client API requests. Information about these proxies is downloaded from Apigee Edge to Edge Microgateway when Edge Microgateway starts up.

It is up to you or your API team to create these proxies on Apigee Edge, using the Apigee Edge management UI or other means if you wish. It's easy to do, and we walk through the details in Setting up and configuring Edge Microgateway.

The characteristics of Edge Microgateway-aware proxies are:

- They provide Edge Microgateway with two key pieces of information: a base path and target URL.

- They must point to HTTP target endpoints. The backend target cannot be a Node.js app referenced by a ScriptTarget element in the TargetEndpoint definition. See the preceding note for more information.

- The proxy names must be prefixed with edgemicro_. For example: edgemicro_weather.

- You can't add policies or conditional flows to these proxies. If you try, they are ignored. Otherwise, Edge Microgateway-aware proxies appear in the Edge Management UI the same as any other API proxy on Edge.

- They can be bundled into products and associated with developer apps.

- Traffic data shows up in Edge Analytics.

- They cannot be traced using the Apigee Edge Trace tool.
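To make the naming rule and the "two key pieces of information" concrete, here is a minimal sketch of how a list of proxy definitions could be reduced to what Edge Microgateway needs. The dictionary layout (`name`, `base_path`, `target_url`, `policies`) and the function name `select_microgateway_proxies` are hypothetical and chosen only for this example; they do not reflect the actual data format exchanged with Apigee Edge.

```python
from typing import Dict, List

# The prefix required for Edge Microgateway-aware proxy names.
EDGEMICRO_PREFIX = "edgemicro_"


def select_microgateway_proxies(proxies: List[dict]) -> Dict[str, dict]:
    """Keep only edgemicro_* proxies and retain base path and target URL.

    Any other fields (for example, policies) are dropped, mirroring the
    statement that policies on these proxies are ignored.
    """
    selected = {}
    for proxy in proxies:
        name = proxy["name"]
        if not name.startswith(EDGEMICRO_PREFIX):
            continue  # Not microgateway-aware: skipped in this sketch.
        selected[name] = {
            "base_path": proxy["base_path"],
            "target_url": proxy["target_url"],
        }
    return selected
```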

About Edge Microgateway and Apigee Edge Analytics

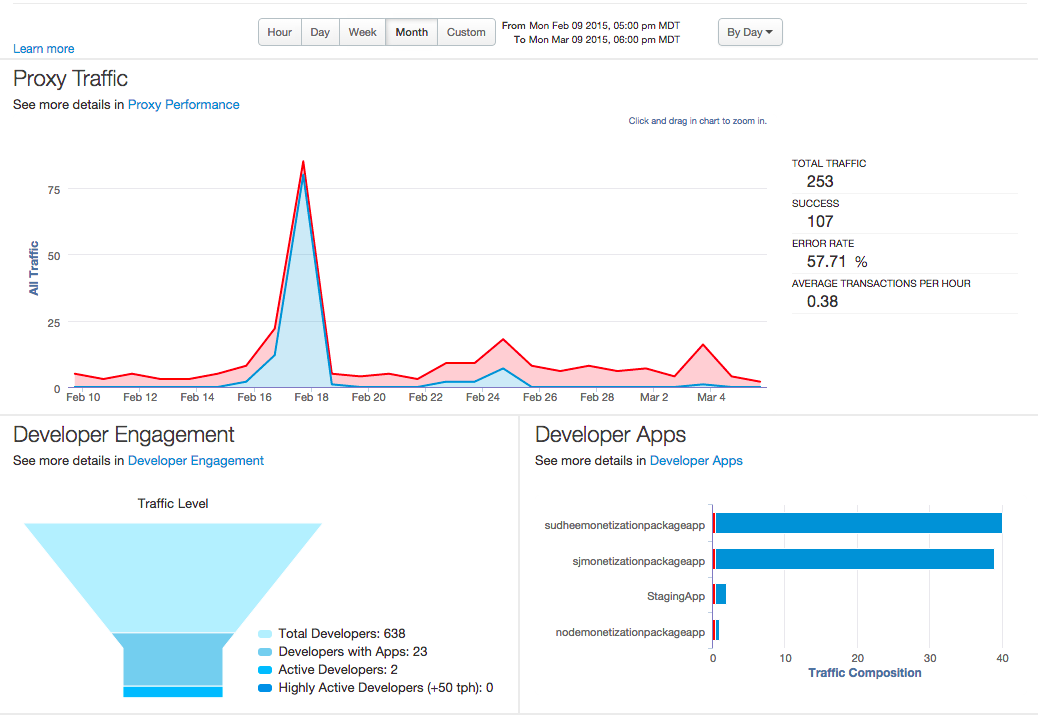

As API traffic flows through Edge Microgateway, Edge Microgateway buffers and asynchronously sends API execution data to Apigee Edge, where the data is stored and processed by the Edge Analytics system. This asynchronous communication allows Edge Microgateway to take advantage of Edge Analytics features while maintaining a relatively small footprint with minimal processing overhead or blocking. The full suite of Edge Analytics dashboards and custom reporting capabilities is available to you and your team to analyze traffic that passes through Edge Microgateway.

For more information about Edge Analytics, see Analytics dashboards.
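The buffer-then-send pattern described above can be illustrated with a small sketch. It is not Edge Microgateway's implementation: the `AnalyticsBuffer` class, its `batch_size` and `flush_interval` parameters, and the `send_batch` callback (standing in for the upload to Apigee Edge) are all assumptions made for this example.

```python
import queue
import threading


class AnalyticsBuffer:
    """Buffer events on the request path and send them from a background thread."""

    def __init__(self, send_batch, batch_size=100, flush_interval=5.0):
        self._send_batch = send_batch          # Hypothetical uploader callback.
        self._batch_size = batch_size
        self._flush_interval = flush_interval
        self._queue = queue.Queue()
        self._stop = threading.Event()
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def record(self, event):
        """Called while handling a request: enqueue and return immediately."""
        self._queue.put(event)

    def _drain(self):
        """Take up to batch_size events off the queue without blocking."""
        batch = []
        while len(batch) < self._batch_size:
            try:
                batch.append(self._queue.get_nowait())
            except queue.Empty:
                break
        return batch

    def _flush(self):
        """Send everything currently buffered, one batch at a time."""
        while True:
            batch = self._drain()
            if not batch:
                return
            self._send_batch(batch)

    def _run(self):
        # Wake up every flush_interval seconds, or as soon as close() is called.
        while not self._stop.is_set():
            self._stop.wait(self._flush_interval)
            self._flush()

    def close(self):
        """Stop the worker and send any events that are still buffered."""
        self._stop.set()
        self._worker.join()
        self._flush()
```

Because `record` only places the event on an in-memory queue, the time spent talking to the analytics backend is never added to the client's request latency, which is the property the section describes.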

About Edge Microgateway security

The role of Apigee Edge

As mentioned previously, Apigee Edge plays a role in securing all client requests to Edge Microgateway. The primary roles Apigee Edge plays are:

- Providing client credentials used as API keys or to generate valid access tokens used by clients to make secure API calls through Edge Microgateway.

- Providing credentials that Edge Microgateway needs to send API execution data to the Apigee Edge Analytics system. These credentials are obtained once by Edge Microgateway during the initial setup steps.

- Providing the platform for bundling API resources into products, registering and managing developers, and creating and managing developer apps.

Client app authentication

Edge Microgateway supports client authentication through access tokens and API keys. Security keys and tokens are generated by Apigee Edge and validated by Edge Microgateway for each API call. If the OAuth plugin is enabled, Edge Microgateway checks the signed access token or API key on each request. If the credential is valid, the API call proceeds to the backend target; if it isn't, an error is returned.

See Setting up and configuring Edge Microgateway for steps needed to obtain and use access tokens and API keys.
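As a rough illustration of what "checking a signed access token" involves, the sketch below signs and verifies a token with an HMAC. This is a stand-in technique chosen so the example runs with only the Python standard library; it is not the token format or signing scheme that Apigee Edge issues. The functions `sign_token` and `verify_token`, the `exp` claim, and the shared secret are all hypothetical.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def sign_token(claims: dict, secret: bytes) -> str:
    """Issuer side (hypothetical): encode the claims and append an HMAC signature."""
    payload = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode())
    signature = hmac.new(secret, payload, hashlib.sha256).hexdigest()
    return payload.decode() + "." + signature


def verify_token(token: str, secret: bytes, now: Optional[float] = None) -> bool:
    """Gateway side (hypothetical): check the signature, then the expiry claim."""
    try:
        payload, signature = token.rsplit(".", 1)
    except ValueError:
        return False  # No signature part at all.
    expected = hmac.new(secret, payload.encode(), hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how many characters matched.
    if not hmac.compare_digest(signature.encode(), expected.encode()):
        return False
    claims = json.loads(base64.urlsafe_b64decode(payload))
    current = time.time() if now is None else now
    return claims.get("exp", 0) > current
```

A request carrying a token for which `verify_token` returns `True` would be forwarded to the backend target; any other request would receive an error response.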

Authentication of Edge Microgateway on Apigee Edge

The asynchronous calls Edge Microgateway makes to update analytics data on Apigee Edge require authentication. This authentication is provided through a public/secret key pair passed to Edge Microgateway through the CLI or using environment variables. You obtain and use these keys once when you first install and start up Edge Microgateway.

API product management platform

Edge serves as a platform for bundling API resources into products, registering and managing developers, and creating and managing developer apps. For example, just as you can create and bundle entities like products and developer apps for regular Apigee Edge proxies, you can do exactly the same for Edge Microgateway proxies. API-level security is made possible by generating public and private security keys for each "bundle." This mechanism is identical to the way API security works on Apigee Edge.

Can I move my existing Edge proxy implementations to Edge Microgateway?

You cannot migrate existing proxies with associated policies or conditional flows to Edge Microgateway. Edge Microgateway requires you to create new "microgateway-aware" proxies. These proxies must be named with a special prefix, edgemicro_. Upon startup, Edge Microgateway discovers these edgemicro_* proxies and downloads configuration information for each of them. This information includes their target URLs and resource paths. From that point on, the proxies are not used. Any policies or conditional flows in these proxies will never execute.

Another reason for having microgateway-aware proxies is that Edge Microgateway asynchronously pushes analytics data to Edge for each microgateway-aware proxy. You can then view the analytics data for microgateway-aware proxies just as you would for any other proxy in the Edge Analytics UI.

The setup topic walks you through all the steps needed to begin proxying API calls through Edge Microgateway. This includes a few simple steps on Apigee Edge to set up the configuration that Edge Microgateway needs, such as creating microgateway-aware proxies. See Setting up and configuring Edge Microgateway.

Learn more about Edge Microgateway

Apigee provides these resources:

- Edge Microgateway Documentation - The docs include an install guide and getting started tutorial, as well as complete reference and configuration information.

- Videos - The Apigee Four Minute Videos for Developers series includes a suite of episodes on Edge Microgateway.

- The Apigee Community is a great place to ask questions and benefit from questions asked, and answered, by others.