You're viewing Apigee Edge documentation.


Symptom

The client application gets an HTTP status code of 502 with the message Bad Gateway as a response for API calls.

The HTTP status code 502 means that the client is not receiving a valid response from the backend servers that should actually fulfill the request.

Error messages

Client application gets the following response code:

HTTP/1.1 502 Bad Gateway

In addition, you may observe the following error message:

    {
      "fault": {
        "faultstring": "Unexpected EOF at target",
        "detail": {
          "errorcode": "messaging.adaptors.http.UnexpectedEOFAtTarget"
        }
      }
    }

Possible causes

One of the typical causes of the 502 Bad Gateway error is the Unexpected EOF error, which can occur for the following reasons:

| Cause | Details | Steps given for |
|---|---|---|
| Incorrectly configured target server | Target server is not properly configured to support TLS/SSL connections. | Edge Public and Private Cloud users |
| EOFException from Backend Server | The backend server may send EOF abruptly. | Edge Private Cloud users only |
| Incorrectly configured keep alive timeout | Keep alive timeouts configured incorrectly on Apigee and backend server. | Edge Public and Private Cloud users |
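Before working through these causes, a client or test harness can confirm that a failing response actually matches this symptom. The following is a minimal Python sketch, not part of Edge itself: the function name is hypothetical, and accepting both spellings of the fault code (with and without `.flow.`) is an assumption based on the two forms that appear in this document.

```python
import json

# Both spellings of the fault code appear in this document; treating them as
# equivalent is an assumption made for this sketch.
EOF_FAULT_CODES = {
    "messaging.adaptors.http.UnexpectedEOFAtTarget",
    "messaging.adaptors.http.flow.UnexpectedEOFAtTarget",
}


def is_unexpected_eof_fault(status_code, body):
    """Return True if the response is a 502 carrying the Unexpected EOF fault."""
    if status_code != 502:
        return False
    try:
        payload = json.loads(body)
    except (TypeError, ValueError):
        return False  # body is missing or is not JSON
    if not isinstance(payload, dict):
        return False
    fault = payload.get("fault")
    if not isinstance(fault, dict):
        return False
    detail = fault.get("detail")
    if not isinstance(detail, dict):
        return False
    return detail.get("errorcode") in EOF_FAULT_CODES
```

A 502 whose body is not this fault payload (for example, one produced by another hop in front of Edge) returns False, which is a hint to look elsewhere first.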

Common diagnosis steps

To diagnose the error, you can use any of the following methods:

API Monitoring

To diagnose the error using API Monitoring:

Using API Monitoring you can investigate

the 502 errors, by following the steps as explained in

Investigate issues. That is:

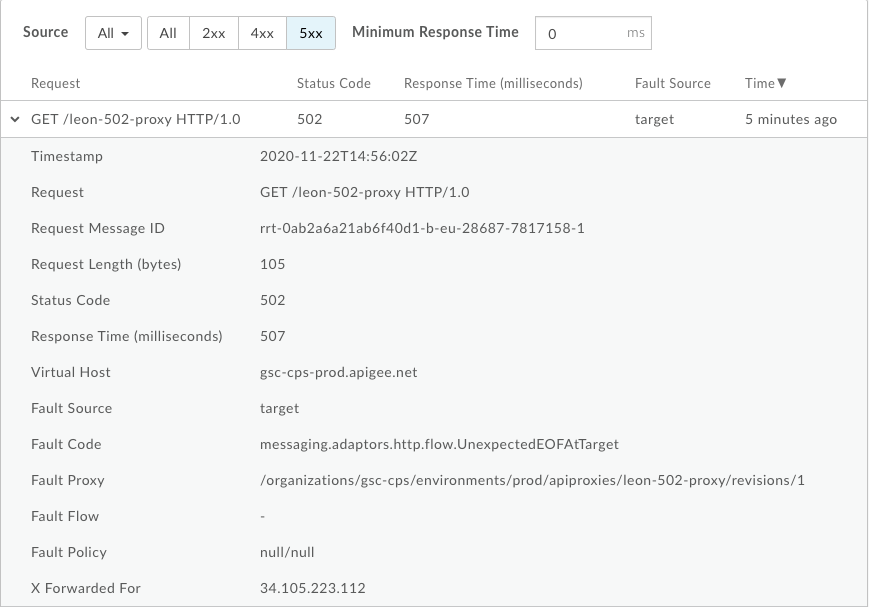

- Go to the Investigate dashboard.

- Select the Status Code in the drop down menu and ensure that the right time

period is selected when the

502errors occurred. - Click the box in the matrix when you are seeing a high number of

502errors. - On the right side, click View Logs for the

502errors which would look something like the following: - Fault Source is

target - Fault Code is

messaging.adaptors.http.UnexpectedEOFAtTarget

Here we can see the following information:

This indicates that the 502 error is caused by the target due to unexpected EOF.

In addition, make a note of the Request Message ID for the 502 error for further

investigation.

Trace tool

To diagnose the error using the Trace tool:

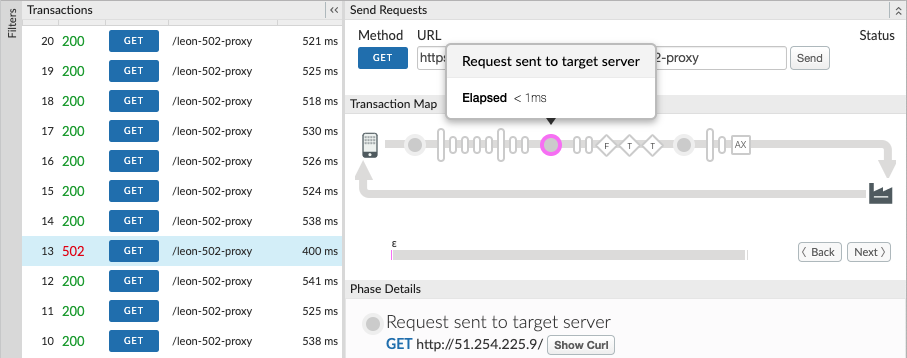

- Enable the

trace session, and make the API call to reproduce the issue

502 Bad Gateway. - Select one of the failing requests and examine the trace.

- Navigate through the various phases of the trace and locate where the failure occurred.

-

You should see the failure after the request has been sent to the target server as shown below:

-

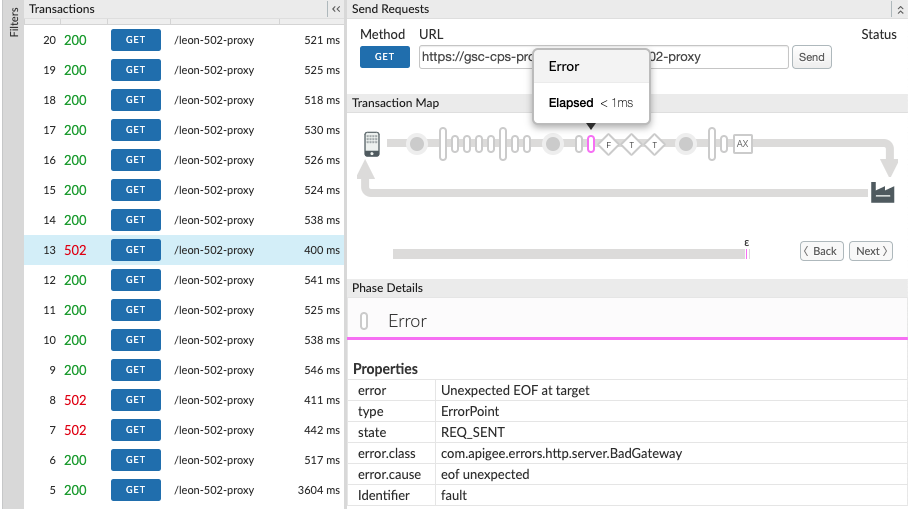

Determine the value of X-Apigee.fault-source and X-Apigee.fault-code in the AX (Analytics Data Recorded) Phase in the trace.

If the values of X-Apigee.fault-source and X-Apigee.fault-code match the values shown in the following table, you can confirm that the

502error is coming from the target server:Response headers Value X-Apigee.fault-source targetX-Apigee.fault-code messaging.adaptors.http.flow.UnexpectedEOFAtTargetIn addition, make a note of the

X-Apigee.Message-IDfor the502error for further investigation.

NGINX access logs

To diagnose the error using NGINX:

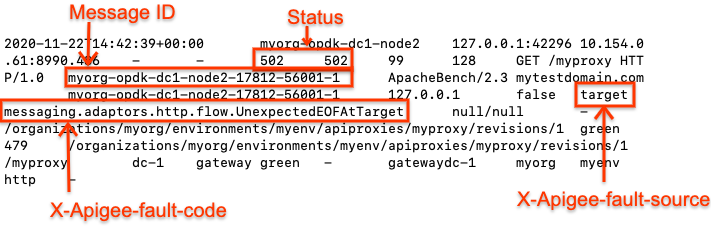

You can also refer to the NGINX access logs to determine the cause of the 502 status

code. This is particularly useful if the issue has occurred in the past or if the issue is

intermittent and you are unable to capture the trace in the UI. Use the following steps to

determine this information from the NGINX access logs:

- Check the NGINX access logs.

/opt/apigee/var/log/edge-router/nginx/ORG~ENV.PORT#_access_log - Search for any

502errors for the specific API proxy during a specific duration (if the problem happened in the past) or for any requests still failing with502. - If there are any

502errors, then check if the error is caused by the target sending anUnexpected EOF. If the values of X-Apigee.fault-source and X- Apigee.fault-code match the values shown in the table below, the502error is caused by the target unexpectedly closing the connection:Response Headers Value X-Apigee.fault-source targetX-Apigee.fault-code messaging.adaptors.http.flow.UnexpectedEOFAtTargetHere's a sample entry showing the

502error caused by the target server:

In addition, make a note of the message IDs for the 502 errors for further investigation.

Cause: Incorrectly configured target server

Target server is not properly configured to support TLS/SSL connections.

Diagnosis

- Use API Monitoring, Trace tool, or NGINX access logs to determine the message ID, fault code, and fault source for the 502 error.

- Enable the trace in the UI for the affected API.

- If the trace for the failing API request shows the following:

  - The 502 Bad Gateway error is seen as soon as the target flow request started.

  - The error.class displays messaging.adaptors.http.UnexpectedEOF.

  Then it is very likely that this issue is caused by an incorrect target server configuration.

- Get the target server definition using the Edge management API call:

  - If you are a Public Cloud user, use this API:

        curl -v https://api.enterprise.apigee.com/v1/organizations/<orgname>/environments/<envname>/targetservers/<targetservername> -u <username>

  - If you are a Private Cloud user, use this API:

        curl -v http://<management-server-host>:<port #>/v1/organizations/<orgname>/environments/<envname>/targetservers/<targetservername> -u <username>

  Sample faulty TargetServer definition:

      <TargetServer name="target1">
        <Host>mocktarget.apigee.net</Host>
        <Port>443</Port>
        <IsEnabled>true</IsEnabled>
      </TargetServer>

- The illustrated TargetServer definition is an example for one of the typical misconfigurations, which is explained as follows:

  Let's assume that the target server mocktarget.apigee.net is configured to accept secure (HTTPS) connections on port 443. However, if you look at the target server definition, there are no other attributes/flags that indicate that it is meant for secure connections. This causes Edge to treat the API requests going to the specific target server as HTTP (non-secure) requests. So Edge will not initiate the SSL Handshake process with this target server.

  Since the target server is configured to accept only HTTPS (SSL) requests on 443, it will reject the request from Edge or close the connection. As a result, you get an UnexpectedEOFAtTarget error on the Message Processor. The Message Processor will send 502 Bad Gateway as a response to the client.
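The misconfiguration described above can be checked mechanically once the TargetServer definition has been fetched. The following Python sketch is illustrative only: the function names are hypothetical, and treating port 443 as "expects TLS" is a heuristic assumption, since nothing in the definition itself states what the backend accepts.

```python
import xml.etree.ElementTree as ET


def tls_enabled(target_server_xml):
    """Return True if the definition contains <SSLInfo><Enabled>true</Enabled>."""
    root = ET.fromstring(target_server_xml)
    enabled = root.findtext("SSLInfo/Enabled", default="false")
    return enabled.strip().lower() == "true"


def looks_misconfigured(target_server_xml, tls_ports=(443,)):
    """Flag a definition that points at a likely TLS port without enabling TLS.

    tls_ports is an assumption: ports on which the backend is expected to
    accept only HTTPS. Adjust it to match your own backends.
    """
    root = ET.fromstring(target_server_xml)
    port_text = root.findtext("Port")
    if port_text is None or not port_text.strip():
        return False  # no port given, so this heuristic cannot decide
    return int(port_text.strip()) in tls_ports and not tls_enabled(target_server_xml)
```

Run against the sample faulty definition, `looks_misconfigured` returns True because the port is 443 and there is no SSLInfo element. A True result is only a prompt to compare the definition with what the backend actually expects.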

Resolution

Always ensure that the target server is configured correctly as per your requirements.

For the illustrated example above, if you want to make requests to a secure (HTTPS/SSL) target server, you need to include the SSLInfo attributes with the enabled flag set to true. While it is allowed to add the SSLInfo attributes for a target server in the target endpoint definition itself, it is recommended to add the SSLInfo attributes as part of the target server definition to avoid any confusion.

- If the backend service requires one-way SSL communication, then:

  - You need to enable the TLS/SSL in the TargetServer definition by including the SSLInfo attributes where the enabled flag is set to true as shown below:

        <TargetServer name="mocktarget">
          <Host>mocktarget.apigee.net</Host>
          <Port>443</Port>
          <IsEnabled>true</IsEnabled>
          <SSLInfo>
            <Enabled>true</Enabled>
          </SSLInfo>
        </TargetServer>

  - If you want to validate the target server's certificate in Edge, then you also need to include the truststore (containing the target server's certificate) as shown below:

        <TargetServer name="mocktarget">
          <Host>mocktarget.apigee.net</Host>
          <Port>443</Port>
          <IsEnabled>true</IsEnabled>
          <SSLInfo>
            <Ciphers/>
            <ClientAuthEnabled>false</ClientAuthEnabled>
            <Enabled>true</Enabled>
            <IgnoreValidationErrors>false</IgnoreValidationErrors>
            <Protocols/>
            <TrustStore>mocktarget-truststore</TrustStore>
          </SSLInfo>
        </TargetServer>

- If the backend service requires two-way SSL communication, then:

  - You need to have SSLInfo attributes with ClientAuthEnabled, Keystore, KeyAlias, and Truststore flags set appropriately, as shown below:

        <TargetServer name="mocktarget">
          <IsEnabled>true</IsEnabled>
          <Host>www.example.com</Host>
          <Port>443</Port>
          <SSLInfo>
            <Ciphers/>
            <ClientAuthEnabled>true</ClientAuthEnabled>
            <Enabled>true</Enabled>
            <IgnoreValidationErrors>false</IgnoreValidationErrors>
            <KeyAlias>keystore-alias</KeyAlias>
            <KeyStore>keystore-name</KeyStore>
            <Protocols/>
            <TrustStore>truststore-name</TrustStore>
          </SSLInfo>
        </TargetServer>
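For the two-way case, it can help to list which of the SSLInfo settings named above are still missing from a definition. The following Python sketch assumes only the element names used in the samples in this section; the function name is hypothetical, and it checks that values are present, not that the referenced keystore or truststore actually exists in the environment.

```python
import xml.etree.ElementTree as ET


def missing_two_way_tls_fields(target_server_xml):
    """Return the SSLInfo settings still needed for two-way TLS, in a fixed order."""
    root = ET.fromstring(target_server_xml)
    ssl_info = root.find("SSLInfo")

    def text(tag):
        # Treat a missing SSLInfo block, a missing element, or an empty
        # element the same way: as "not set".
        if ssl_info is None:
            return ""
        return (ssl_info.findtext(tag) or "").strip()

    missing = []
    for flag in ("Enabled", "ClientAuthEnabled"):
        if text(flag).lower() != "true":
            missing.append(flag)
    for reference in ("KeyStore", "KeyAlias", "TrustStore"):
        if not text(reference):
            missing.append(reference)
    return missing
```

An empty list means the definition has every setting this section lists for two-way SSL. A one-way definition that only sets Enabled would report ClientAuthEnabled, KeyStore, KeyAlias, and TrustStore as missing.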

References

Load balancing across backend servers

Cause: EOFException from the backend server

The backend server may send EOF (End of File) abruptly.

Diagnosis

- Use API Monitoring, Trace tool, or

NGINX access logs to determine the message ID,

fault code, and fault source for the

502error. - Check the Message Processor logs

(

/opt/apigee/var/log/edge-message-processor/logs/system.log) and search to see if you haveeof unexpectedfor the specific API or if you have the uniquemessageidfor the API request, then you can search for it.Sample exception stack trace from Message Processor log

"message": "org:myorg env:test api:api-v1 rev:10 messageid:rrt-1-14707-63403485-19 NIOThread@0 ERROR HTTP.CLIENT - HTTPClient$Context$3.onException() : SSLClientChannel[C:193.35.250.192:8443 Remote host:0.0.0.0:50100]@459069 useCount=6 bytesRead=0 bytesWritten=755 age=40107ms lastIO=12832ms .onExceptionRead exception: {} java.io.EOFException: eof unexpected at com.apigee.nio.channels.PatternInputChannel.doRead(PatternInputChannel.java:45) ~[nio-1.0.0.jar:na] at com.apigee.nio.channels.InputChannel.read(InputChannel.java:103) ~[nio-1.0.0.jar:na] at com.apigee.protocol.http.io.MessageReader.onRead(MessageReader.java:79) ~[http-1.0.0.jar:na] at com.apigee.nio.channels.DefaultNIOSupport$DefaultIOChannelHandler.onIO(NIOSupport.java:51) [nio-1.0.0.jar:na] at com.apigee.nio.handlers.NIOThread.run(NIOThread.java:123) [nio-1.0.0.jar:na]"

In the above example, you can see that the

java.io.EOFException: eof unexpectederror occurred while Message Processor is trying to read a response from the backend server. This exception indicates the end of file (EOF), or the end of stream has been reached unexpectedly.That is, the Message Processor sent the API request to the backend server and was waiting or reading the response. However, the backend server terminated the connection abruptly before the Message Processor got the response or could read the complete response.

- Check your backend server logs and see if there are any errors or information that could have led the backend server to terminate the connection abruptly. If you find any errors/information, then go to Resolution and fix the issue appropriately in your backend server.

- If you don't find any errors or information in your backend server, collect the

tcpdumpoutput on the Message Processors:- If your backend server host has a single IP address then use the following command:

tcpdump -i any -s 0 host IP_ADDRESS -w FILE_NAME

- If your backend server host has multiple IP addresses, then use the following command:

tcpdump -i any -s 0 host HOSTNAME -w FILE_NAME

Typically, this error is caused because the backend server responds back with

[FIN,ACK]as soon as the Message Processor sends the request to the backend server.

- If your backend server host has a single IP address then use the following command:

-

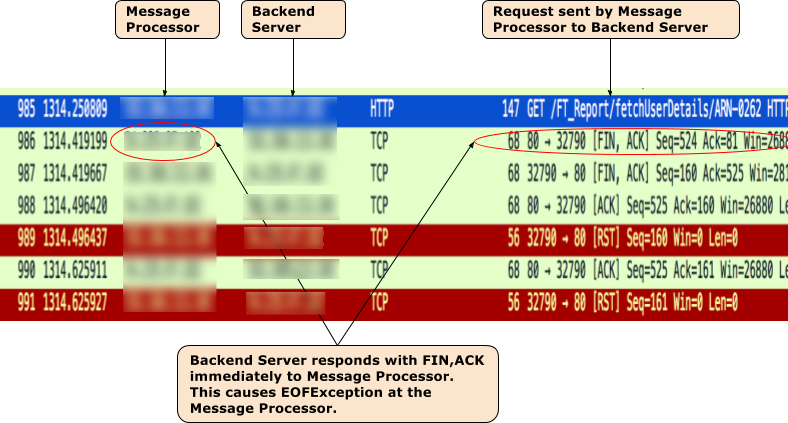

Consider the following

tcpdumpexample.Sample

tcpdumptaken when502 Bad Gateway Error(UnexpectedEOFAtTarget) occurred

- From the TCPDump output, you notice the following sequence of events:

- In packet

985, the Message Processor sends the API request to the backend server. - In packet

986, the backend server immediately responds back with[FIN,ACK]. - In packet

987, the Message Processor responds with[FIN,ACK]to the backend server. - Eventually the connections are closed with

[ACK]and[RST]from both the sides. - Since the backend server sends

[FIN,ACK], you get the exceptionjava.io.EOFException: eof unexpectedexception on the Message Processor.

- In packet

- This can happen if there's a network issue at the backend server. Engage your network operations team to investigate this issue further.
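The log search in the second diagnosis step can be scripted when system.log is large. The following Python sketch relies only on the `messageid:` token and the `eof unexpected` text visible in the sample entry above; the function name is hypothetical. It assumes that an exception line without its own `messageid:` belongs to the most recent entry that had one, which may not hold if entries from different threads interleave.

```python
import re

MESSAGE_ID = re.compile(r"messageid:(\S+)")


def find_eof_message_ids(lines, message_id=None):
    """Return the message IDs of log entries that report 'eof unexpected'.

    If message_id is given, only hits for that ID are returned.
    """
    last_id = None
    hits = []
    for line in lines:
        match = MESSAGE_ID.search(line)
        if match:
            last_id = match.group(1)  # remember the entry this line belongs to
        if "eof unexpected" in line and (message_id is None or last_id == message_id):
            hits.append(last_id)
    return hits
```

Because the function iterates over lines, an open file object can be passed directly, for example `find_eof_message_ids(open(path))` with `path` pointing at the Message Processor log.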

Resolution

Fix the issue on the backend server appropriately.

If the issue persists and you need assistance troubleshooting 502 Bad Gateway Error or you suspect that it's an issue within Edge, contact Apigee Edge Support.

Cause: Incorrectly configured keep alive timeout

Before you diagnose if this is the cause for the 502 errors, please read through the following concepts.

Persistent connections in Apigee

Apigee by default (and in keeping with the HTTP/1.1 standard) uses persistent connections when communicating with the target backend server. Persistent connections can increase performance by allowing an already established TCP and (if applicable) TLS/SSL connection to be re-used, which reduces latency overheads. The duration for which a connection is kept open is controlled through the keep alive timeout property (keepalive.timeout.millis).

Both the backend server and the Apigee Message Processor use keep alive timeouts to keep connections open with one another. Once no data is received within the keep alive timeout duration, the backend server or Message Processor can close the connection with the other.

API Proxies deployed to a Message Processor in Apigee, by default, have a keep alive timeout set to 60s unless overridden. Once no data is received for 60s, Apigee will close the connection with the backend server. The backend server will also maintain a keep alive timeout, and once this expires the backend server will close the connection with the Message Processor.

Implication of incorrect keep alive timeout configuration

If either Apigee or the backend server is configured with incorrect keep alive timeouts, then it results in a race condition which causes the backend server to send an unexpected End Of File (FIN) in response to a request for a resource.

For example, if the keep alive timeout is configured within the API Proxy or the Message Processor with a value greater than or equal to the timeout of the upstream backend server, then the following race condition can occur. That is, if the Message Processor does not receive any data until very close to the threshold of the backend server keep alive timeout, then a request comes through and is sent to the backend server using the existing connection. This can lead to 502 Bad Gateway due to Unexpected EOF error as explained below:

- Let’s say the keep alive timeout set on both the Message Processor and the backend server is 60 seconds and no new request came until 59 seconds after the previous request was served by the specific Message Processor.

- The Message Processor goes ahead and processes the request that came in at the 59th second using the existing connection (as the keep alive timeout has not yet elapsed) and sends the request to the backend server.

- However, before the request arrives at the backend server the keep alive timeout threshold has since been exceeded on the backend server.

- The Message Processor's request for a resource is in-flight, but the backend server attempts to close the connection by sending a FIN packet to the Message Processor.

- While the Message Processor is waiting for the data to be received, it instead receives the unexpected FIN, and the connection is terminated.

- This results in an Unexpected EOF and subsequently a 502 is returned to the client by the Message Processor.

In this case, we observed the 502 error occurred because the same keep alive timeout value of 60 seconds was configured on both the Message Processor and backend server. Similarly, this issue can also happen if a higher value is configured for keep alive timeout on the Message Processor than on the backend server.
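The race described above can be made concrete with a small timeline model. The following Python sketch is a deliberate simplification and not a description of Edge internals: it assumes both sides start their idle timers at the same instant, that a request or a FIN takes `latency_ms` to cross the network (a hypothetical parameter), and that the Message Processor stops using a connection as soon as the backend's FIN reaches it.

```python
def connection_outcome(idle_ms, mp_timeout_ms, backend_timeout_ms, latency_ms=5):
    """Classify what happens to a request sent after idle_ms of inactivity."""
    if idle_ms >= mp_timeout_ms:
        # The Message Processor's own timer expired first, so it closed the
        # idle connection and opens a fresh one for this request.
        return "new connection"
    if idle_ms >= backend_timeout_ms + latency_ms:
        # The backend's FIN has already reached the Message Processor, so the
        # dead connection is not re-used.
        return "new connection"
    if idle_ms + latency_ms >= backend_timeout_ms:
        # The request is in flight while the backend's timer expires: the
        # request and the FIN cross each other on the wire.
        return "unexpected EOF"
    return "connection reused"
```

With both timeouts at 60000 ms, a request sent at 59998 ms falls into the "unexpected EOF" window. With the Message Processor lowered to 30000 ms against the same backend, every request is either re-used safely or sent on a new connection, which is the effect the resolution further down aims for.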

Diagnosis

- If you are a Public Cloud user:

  - Use API Monitoring or Trace tool (as explained in Common diagnosis steps) and verify that you have both of the following settings:

    - Fault code: messaging.adaptors.http.flow.UnexpectedEOFAtTarget

    - Fault source: target

  - Go to Using tcpdump for further investigation.

- If you are a Private Cloud user:

  - Use Trace tool or NGINX access logs to determine the message ID, fault code, and fault source for the 502 error.

  - Search for the message ID in the Message Processor log (/opt/apigee/var/log/edge-message-processor/logs/system.log).

  - You will see the java.io.EOFException: eof unexpected as shown below:

        2020-11-22 14:42:39,917 org:myorg env:prod api:myproxy rev:1 messageid:myorg-opdk-dc1-node2-17812-56001-1 NIOThread@1 ERROR HTTP.CLIENT - HTTPClient$Context$3.onException() : ClientChannel[Connected: Remote:51.254.225.9:80 Local:10.154.0.61:35326]@12972 useCount=7 bytesRead=0 bytesWritten=159 age=7872ms lastIO=479ms isOpen=true.onExceptionRead exception: {}
        java.io.EOFException: eof unexpected
        at com.apigee.nio.channels.PatternInputChannel.doRead(PatternInputChannel.java:45)
        at com.apigee.nio.channels.InputChannel.read(InputChannel.java:103)
        at com.apigee.protocol.http.io.MessageReader.onRead(MessageReader.java:80)
        at com.apigee.nio.channels.DefaultNIOSupport$DefaultIOChannelHandler.onIO(NIOSupport.java:51)
        at com.apigee.nio.handlers.NIOThread.run(NIOThread.java:220)

    - The error java.io.EOFException: eof unexpected indicates that the Message Processor received an EOF while it was still waiting to read a response from the backend server.

    - The attribute useCount=7 in the above error message indicates that the Message Processor had re-used this connection about seven times and the attribute bytesWritten=159 indicates that the Message Processor had sent the request payload of 159 bytes to the backend server. However, it received zero bytes back when the unexpected EOF occurred.

    - This shows that the Message Processor had re-used the same connection multiple times, and on this occasion it sent data but shortly afterwards received an EOF before any data was received. This means there is a high probability that the backend server's keep alive timeout is shorter than or equal to that set in the API proxy.

    You can investigate further with the help of tcpdump as explained below.
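The reading of useCount, bytesWritten, and bytesRead described above can be applied to many log entries at once. The following Python sketch parses only the `name=value` fields visible in the sample entry; the function names are hypothetical, and the thresholds encode the heuristic from this section (a re-used connection, data written, nothing read back) instead of any documented rule. It expects the whole log entry, header and exception text together, as one string.

```python
import re

FIELD = re.compile(r"\b(useCount|bytesRead|bytesWritten)=(\d+)")


def channel_stats(log_entry):
    """Extract useCount, bytesRead and bytesWritten from a log entry as integers."""
    return {name: int(value) for name, value in FIELD.findall(log_entry)}


def suggests_keep_alive_race(log_entry):
    """Heuristic: EOF on a re-used connection after a write and before any read."""
    stats = channel_stats(log_entry)
    return (
        "eof unexpected" in log_entry
        and stats.get("useCount", 0) > 1       # connection had been re-used
        and stats.get("bytesWritten", 0) > 0   # the request was sent
        and stats.get("bytesRead", 1) == 0     # nothing came back
    )
```

A True result only raises the probability that mismatched keep alive timeouts are involved; the tcpdump and timeout comparison that follow are still needed to confirm it.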

Using tcpdump

- Capture a

tcpdumpon the backend server with the following command:tcpdump -i any -s 0 host MP_IP_Address -w File_Name

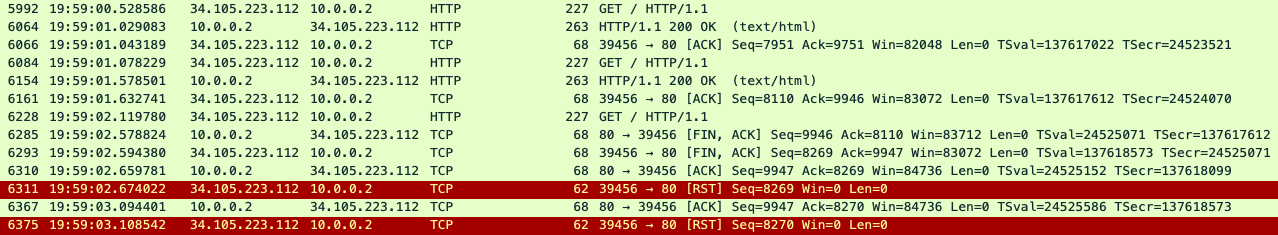

- Analyze the

tcpdumpcaptured:Here's a sample tcpdump output:

In the above sample

tcpdump, you can see the following:- In packet

5992,the backend server received aGETrequest. - In packet

6064, it responds with200 OK. - In packet

6084, the backend server received anotherGETrequest. - In packet

6154, it responds with200 OK. - In packet

6228, the backend server received a thirdGETrequest. - This time, the backend server returns a

FIN, ACKto the Message Processor (packet6285) initiating the closure of the connection.

The same connection was re-used twice successfully in this example, but on the third request, the backend server initiates a closure of the connection, while the Message Processor is waiting for the data from the backend server. This suggests that the backend server's keep alive timeout is most likely shorter or equal to the value set in the API proxy. To validate this, see Compare keep alive timeout on Apigee and backend server.

- In packet

Compare keep alive timeout on Apigee and backend server

- By default, Apigee uses a value of 60 seconds for the keep alive timeout property.

- However, it is possible that you may have overridden the default value in the API Proxy. You can verify this by checking the specific TargetEndpoint definition in the failing API Proxy that is giving 502 errors.

  Sample TargetEndpoint configuration:

      <TargetEndpoint name="default">
        <HTTPTargetConnection>
          <URL>https://mocktarget.apigee.net/json</URL>
          <Properties>
            <Property name="keepalive.timeout.millis">30000</Property>
          </Properties>
        </HTTPTargetConnection>
      </TargetEndpoint>

  In the above example, the keep alive timeout property is overridden with a value of 30 seconds (30000 milliseconds).

- Next, check the keep alive timeout property configured on your backend server. Let’s say your backend server is configured with a value of 25 seconds.

- If you determine that the value of the keep alive timeout property on Apigee is higher than the value of the keep alive timeout property on the backend server as in the above example, then that is the cause for 502 errors.
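The comparison in the steps above can be sketched in Python as follows. The function names are hypothetical; the code reads keepalive.timeout.millis from a TargetEndpoint definition shaped like the sample, and falls back to the 60 second default stated in the first step. It does not account for an override set at the Message Processor level, which would have to be checked separately.

```python
import xml.etree.ElementTree as ET

DEFAULT_KEEPALIVE_MS = 60_000  # default of 60 seconds, as stated in the first step


def proxy_keepalive_ms(target_endpoint_xml):
    """Return keepalive.timeout.millis from a TargetEndpoint, or the default."""
    root = ET.fromstring(target_endpoint_xml)
    for prop in root.iterfind("HTTPTargetConnection/Properties/Property"):
        if prop.get("name") == "keepalive.timeout.millis":
            text = (prop.text or "").strip()
            if text:
                return int(text)
    return DEFAULT_KEEPALIVE_MS


def keepalive_is_safe(target_endpoint_xml, backend_keepalive_ms):
    """True if the proxy's timeout is strictly lower than the backend's."""
    return proxy_keepalive_ms(target_endpoint_xml) < backend_keepalive_ms
```

For the example in this section (30000 ms in the proxy, 25 seconds on the backend), `keepalive_is_safe(xml, 25_000)` returns False, matching the conclusion that this configuration can produce 502 errors.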

Resolution

Ensure that the keep alive timeout property is always lower on Apigee (in the API Proxy and Message Processor component) compared to that on the backend server.

- Determine the value set for the keep alive timeout on the backend server.

- Configure an appropriate value for the keep alive timeout property in the API Proxy or Message Processor, such that the keep alive timeout property is lower than the value set on the backend server, using the steps described in Configuring keep alive timeout on Message Processors.

If the problem still persists, go to Must gather diagnostic information.

Best Practice

It is strongly advised that the downstream components always have a lower keep alive timeout threshold than the one configured on the upstream servers, to avoid these kinds of race conditions and 502 errors. Each downstream hop should have a lower timeout than the hop upstream of it. In Apigee Edge, it is good practice to use the following guidelines:

- The client keep alive timeout should be less than the Edge Router keep alive timeout.

- The Edge Router keep alive timeout should be less than the Message Processor keep alive timeout.

- The Message Processor keep alive timeout should be less than the target server keep alive timeout.

- If you have any other hops in front of or behind Apigee, the same rule should be applied. You should always leave it as the responsibility of the downstream client to close the connection with the upstream.
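The guidelines above amount to requiring strictly increasing timeouts along the request path. The following Python sketch checks that for an ordered list of hops; the function name and the example values are hypothetical and would need to be replaced with the timeouts actually configured in your deployment.

```python
def keepalive_violations(hops):
    """Return (downstream, upstream) pairs where the downstream timeout is not lower.

    hops is a list of (name, timeout_ms) tuples ordered from the client
    towards the target server.
    """
    violations = []
    for (down_name, down_ms), (up_name, up_ms) in zip(hops, hops[1:]):
        if down_ms >= up_ms:
            violations.append((down_name, up_name))
    return violations


# Hypothetical values, for illustration only.
chain = [
    ("client", 20_000),
    ("router", 30_000),
    ("message processor", 60_000),
    ("target server", 60_000),
]
```

Here `keepalive_violations(chain)` reports the Message Processor and target server pair, because equal timeouts leave the same race window open as a higher downstream value does.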

Must gather diagnostic information

If the problem persists even after following the above instructions, gather the following diagnostic information, and then contact Apigee Edge Support.

If you are a Public Cloud user, provide the following information:

- Organization name

- Environment name

- API Proxy name

- Complete curl command to reproduce the 502 error

- Trace file containing the requests with 502 Bad Gateway - Unexpected EOF error

- If the 502 errors are not occurring currently, provide the time period with the timezone information when 502 errors occurred in the past.

If you are a Private Cloud user, provide the following information:

- Complete error message observed for the failing requests

- Organization, Environment name and API Proxy name for which you are observing 502 errors

- API Proxy bundle

- Trace file containing the requests with 502 Bad Gateway - Unexpected EOF error

- NGINX access logs

      /opt/apigee/var/log/edge-router/nginx/ORG~ENV.PORT#_access_log

- Message Processor logs

      /opt/apigee/var/log/edge-message-processor/logs/system.log

- The time period with the timezone information when the 502 errors occurred

- Tcpdumps gathered on the Message Processors or the backend server, or both when the error occurred